Designlet: Deploy NSX Advanced Load Balancer with Inline Topology on VMC on AWS

Introduction

VMware's NSX Advanced Load Balancer (NSX ALB) is a versatile solution that offers load balancing, web application firewall, and application analytics capabilities across on-premises data centers and multiple clouds. By leveraging a software-defined platform, NSX ALB ensures that applications are delivered reliably and securely, with consistent performance across bare metal servers, virtual machines, and containers.

NSX ALB provides two main topologies for load balancing: one-arm and inline. The one-arm topology is generally preferred. In the one-arm topology, NSX ALB is deployed alongside the existing network topology, and traffic is directed to the NSX ALB Service Engines (SEs) via a virtual IP address (VIP). In the inline topology, by contrast, NSX ALB acts as the default gateway for backend application servers, and all traffic from the backend servers to other networks passes through the NSX ALB SEs.

Since VMware Cloud on AWS SDDC version 1.9, NSX Advanced Load Balancer has been available as a customer-managed solution. In this setup, the NSX ALB controllers and SEs are deployed manually as VMs through vCenter in a VMware Cloud on AWS SDDC. Several VMware blog posts and Tech Zone articles cover deploying NSX ALB on VMware Cloud on AWS. For further information, refer to the following:

- Build Load Balancing Service in VMC on AWS with NSX ALB

- Deploy and Configure NSX Advanced Load Balancer on VMC on AWS

Initially, only the one-arm topology was supported. With the introduction of additional Tier-1 gateways in VMware Cloud on AWS SDDC version 1.18, the inline topology is now also supported. This guide gives an overview of the considerations for deploying NSX ALB in an inline topology on VMware Cloud on AWS.

Summary and Considerations

| Item | Details |
|---|---|
| Use Cases | |
| Prerequisites | Understand the VMware Cloud on AWS SDDC network architecture, the NSX ALB architecture, and the NSX ALB deployment topologies (inline and one-arm). |
| General Considerations | The NSX ALB inline topology can be useful in certain scenarios, but it is not always the optimal choice. A typical use case for the inline topology is preserving the client's IP address. However, if the backend applications support alternatives such as X-Forwarded-For for HTTP/HTTPS traffic or the PROXY protocol for TCP traffic, the inline topology is not required to retain the client IP. |
| Performance Considerations | To support the NSX ALB inline topology, all traffic into or out of the segments served by the NSX ALB load balancer must pass through the SEs, whether or not it is load balanced. This places additional load on the SEs, which may need extra compute resources to handle non-load-balanced traffic such as backup and application-to-database traffic. Ensure that the SEs have sufficient resources for both types of traffic to avoid performance issues. CPU and memory reservations are a key technique for guaranteeing SE performance: as performance requirements increase, reservations can be applied to the SEs to guarantee them resources from the underlying host, up to 100% for the most consistent performance. In addition, newer hardware such as i4i hosts can improve SE performance, with better response times, throughput, and reliability. VMware Cloud on AWS provides several storage options, including all-flash vSAN, Amazon FSx for NetApp ONTAP, and Flex Storage. Among these, vSAN with a RAID-1 storage policy (with Dual-Site Mirroring for a multi-AZ VMware Cloud on AWS SDDC) is recommended for SEs because it provides the best write performance, which is required for collecting real-time metrics for virtual services. |
| Cost Implications | The NSX ALB inline topology supports only the Active-Standby HA mode, which may require larger or additional SEs to achieve the desired performance. |
| Documentation Reference | Designlet: VMware Cloud on AWS Static Routing on Multiple CGWs (T1s); Designlet: Understanding VMware Cloud on AWS Network Performance; VMware Cloud on AWS: SDDC Network Architecture; NSX ALB Architectural Overview; Default Gateway (IP Routing on Avi SE); Deploy and Configure NSX Advanced Load Balancer on VMC on AWS |
| Last Updated | Apr 2023 |

Planning and Implementation

The following sections provide the details for planning and implementing an NSX ALB inline topology deployment.

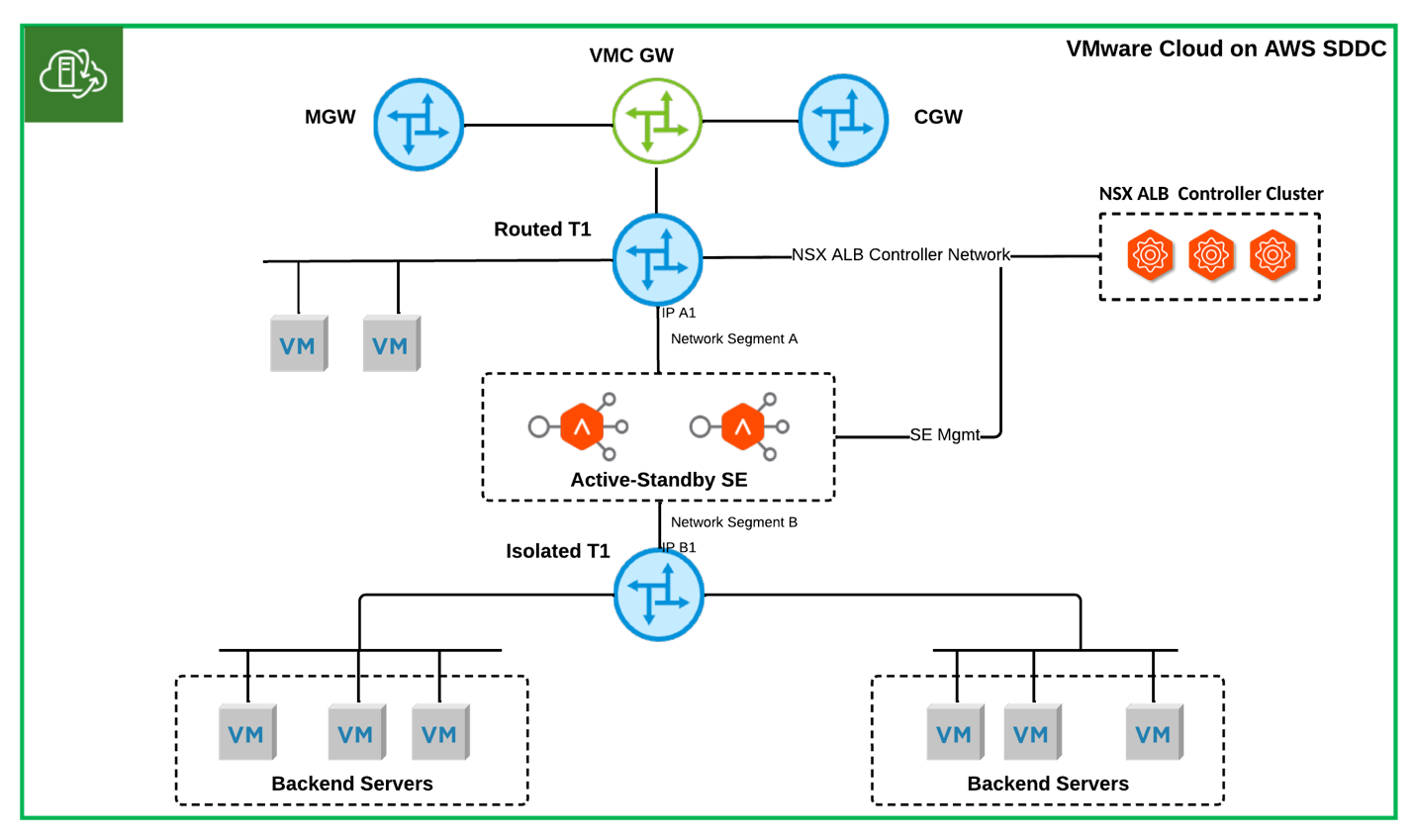

Figure 1 - NSX ALB Inline Topology on VMware Cloud on AWS

NSX ALB Controller Cluster

When deploying the NSX ALB controller cluster, the major consideration is meeting its communication requirements. The NSX ALB controllers must be able to communicate with various systems, e.g. NSX ALB SEs and DNS, NTP, and Syslog servers. These systems could be located within the same SDDC, in different SDDCs, or on-premises. It is also common to deploy both the inline and one-arm topologies within the same VMware Cloud on AWS SDDC. To simplify the connectivity configuration, it's recommended to place the NSX ALB controllers in a network segment connected to a routed T1 or to the default compute gateway (CGW). Because the NSX ALB controllers and all SEs are within the same SDDC, the network latency requirements between controllers (< 10 ms) and between controllers and SEs (< 70 ms) can be met even in a multi-AZ VMware Cloud on AWS SDDC. Figure 1 illustrates an example deployment where the controller cluster is connected to a routed T1.
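The latency budgets above can be expressed as a simple check. The sketch below is a minimal illustration, assuming you have already collected round-trip latency measurements (for example with ping) between controllers and between controllers and SEs. The VM names and latency values are hypothetical placeholders, not measured VMware Cloud on AWS figures.

```python
# Latency budgets quoted in the text (milliseconds, strict upper bounds).
CONTROLLER_TO_CONTROLLER_MAX_MS = 10.0
CONTROLLER_TO_SE_MAX_MS = 70.0


def check_latency(ctrl_ctrl_ms, ctrl_se_ms):
    """Return a list of (kind, pair, latency) tuples that exceed the budgets.

    ctrl_ctrl_ms: dict mapping (controller, controller) pairs to latency in ms.
    ctrl_se_ms:   dict mapping (controller, SE) pairs to latency in ms.
    """
    violations = []
    for pair, ms in ctrl_ctrl_ms.items():
        if ms >= CONTROLLER_TO_CONTROLLER_MAX_MS:
            violations.append(("controller-controller", pair, ms))
    for pair, ms in ctrl_se_ms.items():
        if ms >= CONTROLLER_TO_SE_MAX_MS:
            violations.append(("controller-se", pair, ms))
    return violations


# Hypothetical measurements for controllers in AZ1 and SEs in both AZs.
ctrl_ctrl = {("ctrl-1", "ctrl-2"): 0.4, ("ctrl-1", "ctrl-3"): 0.5, ("ctrl-2", "ctrl-3"): 0.4}
ctrl_se = {("ctrl-1", "se-az1"): 0.6, ("ctrl-1", "se-az2"): 1.8}

print(check_latency(ctrl_ctrl, ctrl_se))  # → []
```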

In a multi-AZ VMware Cloud on AWS SDDC, it's common practice to deploy all controllers in one Availability Zone (AZ). Distributing three controllers across two AZs is not required for the following reasons:

- When all three controllers are deployed in one AZ, such as AZ1, active SEs in AZ2 continue to forward traffic for the configured virtual services if AZ1 fails. Once vSphere HA restarts the AZ1 controllers in AZ2, the controller cluster becomes operational again. Distributing the three controllers across two AZs provides minimal additional resilience to the control plane and no improvement in the availability of the load balancing service.

- Lower network latency between controllers is preferred, and deploying all three controllers in one AZ guarantees the lowest possible latency.

NSX ALB SE Group

The only supported high-availability (HA) mode for inline SEs is Active-Standby. Within an inline SE group, each SE connects its two data vNICs to VMware Cloud on AWS NSX custom T1 gateways: one vNIC to a routed T1 and the other to an isolated T1. In addition, the SE management vNIC is placed on the same network segment as the controllers to minimize latency between the SEs and the controllers.
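To make the SE group layout concrete, the following sketch encodes the rules in this section as a validation function: Active-Standby HA with a pair of SEs, one data vNIC on a routed T1 and one on an isolated T1, and the management vNIC on the controller segment. It is a hypothetical, API-independent model; the class names, field names, and the `"active-standby"` label are assumptions for illustration and do not correspond to NSX ALB API objects.

```python
from dataclasses import dataclass, field


@dataclass
class ServiceEngine:
    name: str
    mgmt_segment: str  # segment of the management vNIC
    data_vnics: dict   # data vNIC label -> name of the T1 it attaches to


@dataclass
class InlineSeGroup:
    name: str
    ha_mode: str       # hypothetical label, e.g. "active-standby"
    t1_types: dict     # T1 name -> "routed" or "isolated"
    engines: list = field(default_factory=list)


def validate(group, controller_segment):
    """Return a list of rule violations for a hypothetical inline SE group."""
    errors = []
    if group.ha_mode != "active-standby":
        errors.append("inline SE groups support Active-Standby only")
    if len(group.engines) != 2:
        errors.append("an Active-Standby group uses a pair of SEs")
    for se in group.engines:
        if se.mgmt_segment != controller_segment:
            errors.append(f"{se.name}: management vNIC not on the controller segment")
        # Each SE needs exactly one routed-T1 vNIC and one isolated-T1 vNIC.
        kinds = sorted(group.t1_types.get(t1, "unknown") for t1 in se.data_vnics.values())
        if kinds != ["isolated", "routed"]:
            errors.append(f"{se.name}: needs one data vNIC on a routed T1 and one on an isolated T1")
    return errors


t1s = {"t1-routed": "routed", "t1-isolated": "isolated"}
ses = [ServiceEngine(f"se-{i}", "mgmt-seg", {"data1": "t1-routed", "data2": "t1-isolated"})
       for i in (1, 2)]
group = InlineSeGroup("inline-se-group", "active-standby", t1s, ses)
print(validate(group, "mgmt-seg"))  # → []
```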

Cloud Type

The only NSX ALB cloud type supported on a VMware Cloud on AWS SDDC is No-Orchestrator.

Virtual IP (VIP) Allocation

Because only the No-Orchestrator cloud is supported on VMware Cloud on AWS, load-balancing VIPs can be allocated manually or through Avi Vantage IPAM or a supported third-party IPAM.

In the inline topology, VIPs can be assigned from available IP addresses in the data network segments directly connected to the SEs, for example network segment A or B (as shown in Figure 1), or from one or more dedicated VIP subnets.
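Allocating a VIP from a directly connected data segment amounts to picking a free host address that does not collide with the T1 interface IP, the SE interface IPs, or the SE floating IP. The first-fit allocator below is a minimal sketch of that idea using Python's standard `ipaddress` module. It is not how Avi Vantage IPAM or any third-party IPAM allocates addresses, and the subnets and addresses are hypothetical.

```python
import ipaddress


def allocate_vip(subnet, reserved, in_use):
    """Return the first free host address in `subnet` as a string, or None.

    reserved: addresses that must never be handed out (T1 interface IP,
              SE interface IPs, SE floating IP).
    in_use:   VIPs already assigned to virtual services.
    """
    taken = {ipaddress.ip_address(a) for a in (*reserved, *in_use)}
    for host in ipaddress.ip_network(subnet).hosts():
        if host not in taken:
            return str(host)
    return None  # subnet exhausted


# Hypothetical segment A: routed T1 interface A1 = .1, SE floating IP = .10,
# SE interface IPs = .11 and .12; one VIP (.2) is already in use.
seg_a = "192.168.10.0/24"
reserved = ["192.168.10.1", "192.168.10.10", "192.168.10.11", "192.168.10.12"]
print(allocate_vip(seg_a, reserved, in_use=["192.168.10.2"]))  # → 192.168.10.3
```

A dedicated VIP subnet works the same way with an empty `reserved` list, but its prefix must also be reachable, which is why the routed T1 needs a static route for it.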

Virtual Service Client

When deploying an inline topology on a VMware Cloud on AWS SDDC, note that virtual service clients cannot be located in network segments connected to the same isolated T1 as the inline SEs. The isolated T1 would route the backend servers' return traffic directly to these clients, bypassing the NSX ALB load balancer.
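The client placement rule can be checked by testing whether a client address falls inside any segment attached to the isolated T1. The sketch below does this with Python's `ipaddress` module; the segment list and client addresses are hypothetical.

```python
import ipaddress


def client_bypasses_se(client_ip, isolated_t1_segments):
    """True if the client sits on a segment attached to the isolated T1.

    Return traffic from backend servers to such a client is routed by the
    isolated T1 directly, so it never passes back through the inline SE.
    """
    ip = ipaddress.ip_address(client_ip)
    return any(ip in ipaddress.ip_network(seg) for seg in isolated_t1_segments)


# Hypothetical segments on the isolated T1: SE data segment B and two
# backend server segments.
isolated = ["192.168.20.0/24", "172.16.1.0/24", "172.16.2.0/24"]
print(client_bypasses_se("172.16.2.50", isolated))  # → True  (unsupported placement)
print(client_bypasses_se("10.1.1.20", isolated))    # → False (supported placement)
```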

Network Connectivity

Proper network connectivity is essential for an NSX ALB deployment. The following requirements must be met:

- Connectivity between virtual service clients and VIPs.

- Connectivity between backend servers and virtual service clients.

- Connectivity between external networks and backend servers.

To meet these requirements, configure VMware Cloud on AWS NSX and NSX ALB as follows:

- Configure NSX ALB to act as a router for all traffic that doesn't require load balancing by setting the following parameters in the network service configuration:

  - service_type: routing_service

  - enable_routing

  - enable_vip_on_all_interfaces

  - floating_intf_ip

Please refer to Network Service Configuration for implementation details.

- Add a default route on NSX ALB with the routed T1 interface IP A1 in network segment A as the next hop, and add static routes to the backend servers' subnets with the isolated T1 interface IP B1 in network segment B as the next hop, as shown in Figure 1.

Note that when both the one-arm and inline topologies are used in the same VMware Cloud on AWS SDDC, a separate VRF context on NSX ALB is required for the inline deployment. The SEs in the two topologies have different network connectivity requirements, and separate VRFs provide separate routing table instances for the inline SEs and the one-arm SEs.

- Add a default route on the isolated T1 with the next hop as the inline SE floating IP in network segment B.

- Add static routes on the routed T1 for VIP subnets and backend servers’ subnets with the next hop as the inline SE floating IP in network segment A.

- When AWS Direct Connect or VMware Transit Connect is used for connectivity from on-premises to the VMware Cloud on AWS SDDC, configure NSX route aggregation in the SDDC to advertise routes for the backend server and VIP subnets. Refer to Aggregate and Filter Routes to Uplinks for implementation details. Additionally, if using a policy-based VPN, ensure that all VIP subnets and application subnets are included in the local networks when defining the VPN policy.
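These routes work together as a chain. The routed T1 sends VIP and backend traffic to the SE floating IP in segment A; the SE forwards backend-bound traffic to the isolated T1 and everything else back to the routed T1; and the isolated T1 default-routes to the SE floating IP in segment B. The sketch below models these static routes with longest-prefix-match lookup so the forwarding decisions can be checked. The addresses are hypothetical values that follow Figure 1. Connected routes, the routed T1's own path to the SDDC uplinks, and the NSX ALB network service settings (`routing_service`, floating interface IPs) are not modelled.

```python
import ipaddress

# Hypothetical addressing following Figure 1.
A1, SE_FIP_A = "192.168.10.1", "192.168.10.10"  # routed T1 IP / SE floating IP, segment A
B1, SE_FIP_B = "192.168.20.1", "192.168.20.10"  # isolated T1 IP / SE floating IP, segment B
BACKENDS = ["172.16.1.0/24", "172.16.2.0/24"]
VIP_SUBNETS = ["10.10.100.0/28"]

# Static routes per device as (prefix, next hop).
ROUTES = {
    # NSX ALB (inline VRF): default toward the routed T1, backends via the isolated T1.
    "se": [("0.0.0.0/0", A1)] + [(p, B1) for p in BACKENDS],
    # Isolated T1: everything leaves through the SE floating IP in segment B.
    "isolated-t1": [("0.0.0.0/0", SE_FIP_B)],
    # Routed T1: VIP and backend subnets through the SE floating IP in segment A.
    "routed-t1": [(p, SE_FIP_A) for p in VIP_SUBNETS + BACKENDS],
}


def next_hop(device, dst):
    """Longest-prefix match over the device's static routes; None if no route."""
    ip = ipaddress.ip_address(dst)
    best = None
    for prefix, hop in ROUTES[device]:
        net = ipaddress.ip_network(prefix)
        if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, hop)
    return best[1] if best else None


print(next_hop("routed-t1", "10.10.100.5"))  # client to VIP → 192.168.10.10
print(next_hop("se", "172.16.2.20"))         # SE to backend → 192.168.20.1
print(next_hop("se", "10.1.1.20"))           # SE to client  → 192.168.10.1
print(next_hop("isolated-t1", "10.1.1.20"))  # backend reply → 192.168.20.10
```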

Service Resilience

To minimize service interruptions in the event of VMware Cloud on AWS host failures, it is highly recommended to define VM-VM Anti-Affinity compute policies to ensure that NSX ALB controllers and SEs are deployed across different physical nodes.
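A VM-VM anti-affinity compute policy is satisfied when no host runs more than one VM from the policy's group. The sketch below checks a placement snapshot against that rule. The VM and host names are hypothetical, and in practice the placement would come from vCenter. Whether controllers and SEs share one policy or use separate policies is a design choice; the function works for either.

```python
from collections import defaultdict


def anti_affinity_violations(placement, group):
    """Return {host: [VMs]} for hosts running more than one VM from `group`.

    placement: dict VM name -> ESXi host name (hypothetical inventory snapshot).
    group:     VM names covered by one VM-VM anti-affinity compute policy.
    """
    by_host = defaultdict(list)
    for vm in group:
        by_host[placement[vm]].append(vm)
    return {host: sorted(vms) for host, vms in by_host.items() if len(vms) > 1}


placement = {
    "ctrl-1": "host-1", "ctrl-2": "host-2", "ctrl-3": "host-3",
    "se-1": "host-4", "se-2": "host-2",
}
print(anti_affinity_violations(placement, ["ctrl-1", "ctrl-2", "ctrl-3"]))  # → {}
print(anti_affinity_violations(placement, list(placement)))  # → {'host-2': ['ctrl-2', 'se-2']}
```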

When load balancing an application distributed across two AZs in a multi-AZ VMware Cloud on AWS SDDC, it is recommended to place the two SEs of an inline SE group in different AZs. This ensures that at least one SE remains available if an AZ fails, which minimizes interruption to load balancing and connectivity. If an AZ failure also takes down the NSX ALB control plane, the standby SE in the surviving AZ becomes active and provides load balancing services once the control plane is restored, without waiting for the original active SE to come back. Note that if the NSX Edge appliances are affected by an AZ failure, end-to-end connectivity for load balancing will not be restored until the NSX Edge appliances are back online.
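The benefit of splitting an Active-Standby pair across AZs can be shown with a small failure model: for every AZ that hosts an SE, fail it and check whether an SE survives. This is a minimal sketch with hypothetical SE and AZ names. It deliberately ignores control plane restoration and NSX Edge placement, both of which also gate recovery as described above.

```python
def surviving_ses(se_az, failed_az):
    """SEs still running after `failed_az` fails (before any vSphere HA restart)."""
    return sorted(se for se, az in se_az.items() if az != failed_az)


def pair_is_az_resilient(se_az):
    """True if the pair keeps at least one SE through any single-AZ failure."""
    return all(surviving_ses(se_az, az) for az in set(se_az.values()))


split = {"se-1": "az1", "se-2": "az2"}
same = {"se-1": "az1", "se-2": "az1"}
print(pair_is_az_resilient(split), pair_is_az_resilient(same))  # → True False
print(surviving_ses(split, "az1"))  # → ['se-2']
```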

Load Balancing Offload

In an inline topology, when backend servers have only a single vNIC, all of their traffic is directed to the inline SEs. This is not always desirable, especially for data backup traffic. To reduce the load on the SEs, a second vNIC can be added to the backend servers, with static routes configured so that traffic to selected destinations uses this second vNIC.
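The second-vNIC approach relies on ordinary longest-prefix-match routing on the backend server: a more specific static route sends selected traffic out of the second vNIC, while the default route still leads to the inline SE. The sketch below models a backend server's routing table. All subnets, gateways, and interface names are hypothetical, and it assumes the second vNIC's segment is attached to a gateway other than the isolated T1 (otherwise the isolated T1's default route would send the traffic back to the SE).

```python
import ipaddress

# Hypothetical backend server routing table: (prefix, next hop, interface).
SERVER_ROUTES = [
    # eth0: backend segment on the isolated T1, whose default route leads to the inline SE.
    ("0.0.0.0/0", "172.16.1.1", "eth0"),
    # eth1: second vNIC on a segment behind another gateway (assumption);
    # the static route sends backup traffic this way.
    ("10.50.0.0/24", "172.16.50.1", "eth1"),
]


def egress(dst):
    """Return (next hop, interface) chosen by longest-prefix match."""
    ip = ipaddress.ip_address(dst)
    matches = [(ipaddress.ip_network(p), hop, dev) for p, hop, dev in SERVER_ROUTES
               if ip in ipaddress.ip_network(p)]
    net, hop, dev = max(matches, key=lambda m: m[0].prefixlen)
    return hop, dev


print(egress("10.50.0.25"))  # backup traffic → ('172.16.50.1', 'eth1'), bypasses the SE
print(egress("10.1.1.20"))   # client reply   → ('172.16.1.1', 'eth0'), via the inline SE
```

On a Linux backend server, the equivalent static route could be added with a command such as `ip route add 10.50.0.0/24 via 172.16.50.1 dev eth1` (same hypothetical addresses).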

Service Monitoring

Service monitoring for NSX ALB should cover the controllers and SEs, as well as related components such as the NSX Edge appliances and the physical ESXi hosts on which the SEs run. In an inline topology, the same traffic traverses the active NSX Edge appliance multiple times, which increases the load on the appliance. Because limits in cloud environments such as VMware Cloud on AWS are more commonly based on packets per second (PPS), it is recommended to closely monitor this metric for the NSX Edge appliances and for the physical ESXi hosts' elastic network adapters in high-load environments. This helps ensure that the NSX Edge and ESXi hosts are not overwhelmed by traffic and that optimal performance is maintained. For further information about VMware Cloud on AWS network performance, see Designlet: Understanding VMware Cloud on AWS Network Performance.
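Because the same traffic can cross the active NSX Edge more than once in an inline topology, the packet rate the Edge handles grows with the number of traversals. The sketch below is back-of-the-envelope arithmetic, assuming a given throughput, an average packet size, and a traversal count. All input values are hypothetical and do not represent published VMware Cloud on AWS limits.

```python
def edge_pps(throughput_gbps, avg_packet_bytes, edge_traversals):
    """Estimate packets per second handled by the active NSX Edge.

    Each traversal of the Edge by the same packet is counted once, so
    designs that send traffic through the Edge repeatedly multiply the rate.
    """
    packets_per_second = throughput_gbps * 1e9 / (avg_packet_bytes * 8)
    return packets_per_second * edge_traversals


# Hypothetical: 2 Gbps of application traffic with 800-byte average packets.
print(f"{edge_pps(2, 800, 1):,.0f}")  # single traversal → 312,500
print(f"{edge_pps(2, 800, 2):,.0f}")  # two traversals   → 625,000
```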