A Brief Introduction to NSX Advanced Load Balancer Integration With VMware Cloud on AWS

Introduction

- Multi-cloud: Consistent experience across deployment of on-premises and cloud environments through central management and orchestration.

- Intelligence: Built-in analytics drive actionable insights that make autoscaling seamless, automation intelligent, and decision making easy.

- Automation: 100% REST APIs enable self-service provisioning and integration into the CI/CD pipeline for application delivery.

NSX ALB Use Cases

The key drivers of NSX ALB adoption are:

- Load Balancer refresh.

- Multi-Cloud initiatives.

- Security, including WAF, DDoS attack mitigation, and compliance (GDPR, PCI, HIPAA).

- Container ingress (integrates via REST APIs with K8s ecosystems like GKE, OpenShift, EKS, AKS, TKG).

Architecture and Components of NSX ALB

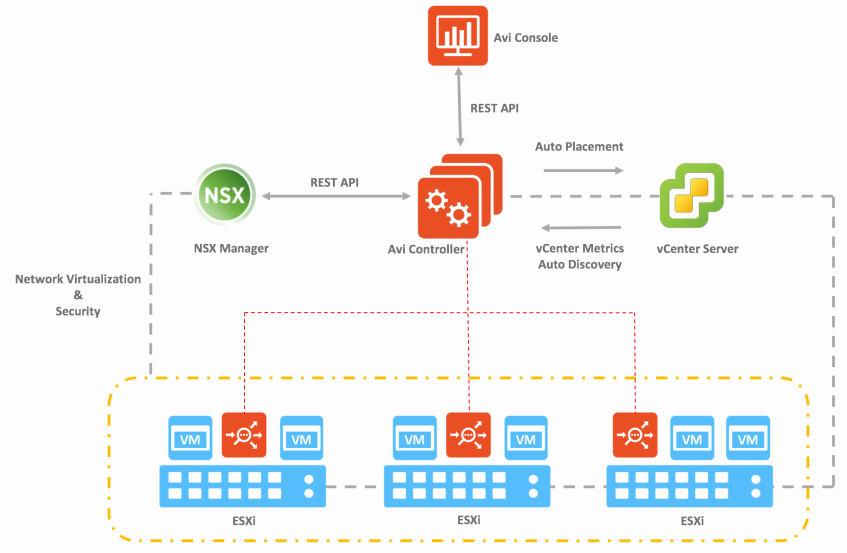

The diagram below shows the high-level architecture of NSX ALB.

Figure 1 - NSX ALB Architecture

As shown in Figure 1, the NSX ALB Controller acts as the entry point for UI/API operations for services and management. The Controller interacts with the vCenter Server and NSX-T in an SDDC via API to auto-discover SDDC objects such as ESXi hosts and network port groups. The Service Engines are placed on the ESXi hosts and perform L4/L7 load balancing for the applications deployed in the SDDC.

NSX ALB consists of two main components:

- NSX ALB Controller: The NSX ALB Controller is the central repository for configuration and policies and can be deployed either on-prem or in the cloud. It is deployed in a VM form factor and can be managed using its web interface, CLI, or REST API.

- Service Engines (SE): The Service Engines (SEs) are lightweight data plane engines that handle all data plane operations by receiving and executing instructions from the controller.
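As a rough illustration of the REST management path, the sketch below builds (without sending) a request that lists virtual services and parses a list-style response. The controller hostname, the version string, and the sample response body are made up for illustration. The `/api/virtualservice` path and the `X-Avi-Version` header follow the commonly documented Avi API conventions, but they should be checked against the API guide for your release. Authentication is deliberately left out.

```python
import json
import urllib.request

# Hypothetical values for illustration; substitute your own controller address
# and the API version that matches your deployment.
CONTROLLER = "https://alb-controller.example.com"
API_VERSION = "20.1.4"


def build_list_request(object_type):
    """Build (but do not send) a GET request that lists objects of one type."""
    return urllib.request.Request(
        url=f"{CONTROLLER}/api/{object_type}",
        method="GET",
        headers={
            "Accept": "application/json",
            # Assumed version header; check the API guide for your release.
            "X-Avi-Version": API_VERSION,
        },
    )


def object_names(response_body):
    """Extract object names from a JSON list response with a 'results' array."""
    payload = json.loads(response_body)
    return [item["name"] for item in payload.get("results", [])]


request = build_list_request("virtualservice")
print(request.get_method(), request.full_url)

# A made-up response body, shaped like a typical list response.
sample = '{"count": 2, "results": [{"name": "web-vs"}, {"name": "api-vs"}]}'
print(object_names(sample))
```

Keeping request construction separate from sending makes the same helper usable from a CI/CD job or a unit test without a live controller.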

The controller handles the following tasks:

- Holds all platform-related configuration.

- Manages and stores all policies related to services and management.

- Deploys Service Engines.

- Manages the placement of virtual services on SEs to load balance new applications or scale up the capacity of existing applications.

- Provides the UI console for configuration and management.

- Hosts the API services and the management plane cluster daemons.

The responsibilities of Service Engines are:

- Perform load balancing and all client and server-facing network interactions.

- Collect real-time application telemetry from application traffic flows.

- Execute data plane application delivery controls, such as health monitoring and performance testing of the back-end servers.

- Protect against security threats (DoS, suspicious client IPs).

Why You Should Choose NSX ALB

Traditional hardware load balancers have the following limitations:

- No autoscaling when the load balancer runs out of capacity for virtual service placement.

- No self-healing in a failure scenario.

- Manual virtual service placement.

- Complex upgrade procedures.

- Limited compatibility with various platforms and cloud infrastructures.

NSX ALB is a 100% software-defined solution designed to address the above challenges.

NSX ALB Use Cases in VMware Cloud on AWS

- Load balancing of applications inside an SDDC.

- Global load balancing across two or more SDDCs in VMware Cloud on AWS, or between an on-prem environment and an SDDC running in VMware Cloud.

- Integration with Tanzu Kubernetes Grid (TKG) to provide load balancing for Kubernetes workloads.

- Use of NSX ALB in a hybrid model to load balance applications stretched between an on-prem datacenter and an SDDC in VMC.

Deployment Considerations

- Deploy NSX ALB Controllers in No-Orchestrator mode in VMware Cloud on AWS.

- NSX ALB Management and VIP CIDRs should be non-overlapping.

- The logical segment selected for the VIP assignment should have DHCP enabled. If DHCP is not used, IP Pools must be defined manually in NSX ALB for the VIP network.

- For better control over placement and resource utilization, deploy the Service Engines into resource pools.

- Because Controllers are deployed in No-Orchestrator mode, network auto-discovery is not performed and the VIP network has to be defined manually.

- Plan the Service Engine sizing before the deployment. Refer to the official NSX ALB documentation on sizing Service Engines.

- It is highly recommended to add the Service Engines to the NSX-T Distributed Firewall exclusion list to prevent dropped flows for scaled-out VIPs.

- To load balance applications that are scattered across more than one logical segment, the general recommendation is to use a single data NIC (VIP segment) on the SE and use L3 for back-end connectivity to the pools.
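The non-overlap requirement for the management and VIP CIDRs is easy to verify before deployment. The following is a minimal sketch using Python's standard `ipaddress` module; the CIDR values are hypothetical and only stand in for a real address plan.

```python
import ipaddress


def cidrs_overlap(management_cidr, vip_cidr):
    """Return True if the two networks share at least one address."""
    management = ipaddress.ip_network(management_cidr, strict=True)
    vip = ipaddress.ip_network(vip_cidr, strict=True)
    return management.overlaps(vip)


# Hypothetical address plan: distinct /24 segments do not overlap.
print(cidrs_overlap("192.168.10.0/24", "192.168.20.0/24"))    # False

# A VIP range carved out of the management segment does overlap.
print(cidrs_overlap("192.168.10.0/24", "192.168.10.128/25"))  # True
```

With `strict=True`, a CIDR that has host bits set (for example `192.168.10.5/24`) raises a `ValueError`, which helps catch typos in the plan early.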

NSX ALB Use Case Designs

Load Balancing of Application Inside an SDDC

As shown in Figure 2, this design load balances applications that are deployed within the SDDC.

In this design:

- The NSX ALB components (Controllers and Service Engines) are deployed on a logical segment created on the Compute Gateway (CGW). This segment can be referred to as the NSX ALB management network.

- The applications to be load-balanced are spread across one or more logical segments.

- Service Engine VMs are also connected directly to the application networks for L2 connectivity with the applications.

- Service Engines are also connected to the VIP network, where IPAM is configured with an IP pool. When a virtual service is created, it is assigned an IP address from the VIP pool.

- Client connections coming into the SDDC from outside land on the CGW and, depending on the configured NAT and firewall rules, are sent to the VIP of the application. The Service Engine internally routes the traffic to the application servers and returns the response to the client.

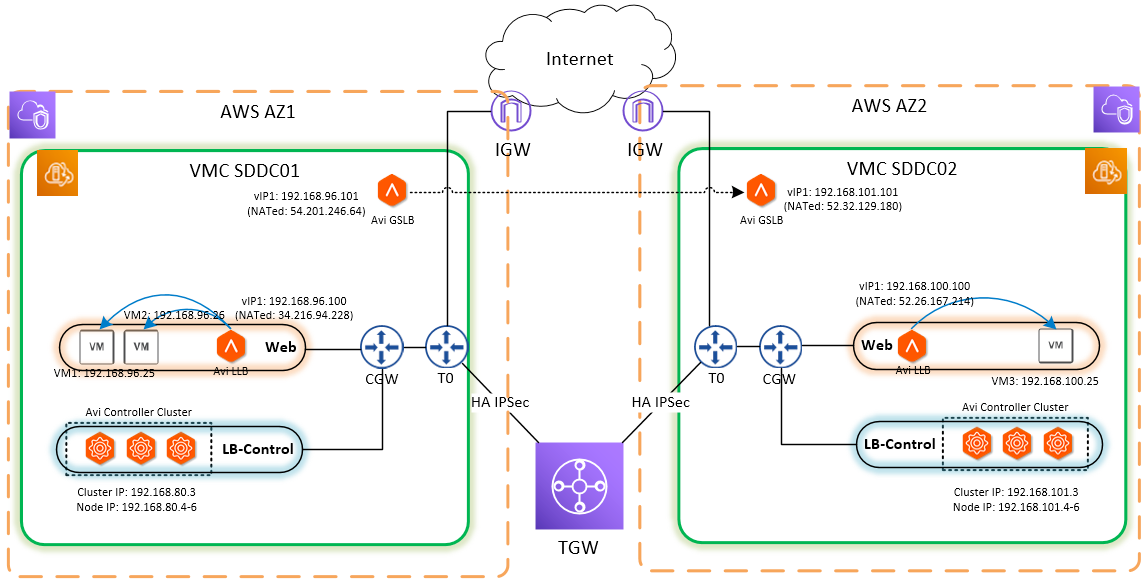
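The VIP assignment described in this design can be pictured as a simple static pool. The sketch below is a toy model only and not how NSX ALB implements IPAM; the `VipPool` class, the address range, and the virtual service names are all hypothetical.

```python
import ipaddress


class VipPool:
    """Toy static IP pool that hands out the lowest free address in a range."""

    def __init__(self, first, last):
        self._first = ipaddress.ip_address(first)
        self._last = ipaddress.ip_address(last)
        if self._last < self._first:
            raise ValueError("pool end precedes pool start")
        self._assigned = {}  # virtual service name -> allocated address

    def allocate(self, virtual_service):
        """Return the VIP for a virtual service, allocating one if needed."""
        if virtual_service in self._assigned:
            return self._assigned[virtual_service]
        in_use = set(self._assigned.values())
        candidate = self._first
        while candidate <= self._last:
            if candidate not in in_use:
                self._assigned[virtual_service] = candidate
                return candidate
            candidate += 1
        raise RuntimeError("VIP pool exhausted")

    def release(self, virtual_service):
        """Return a virtual service's VIP to the pool."""
        self._assigned.pop(virtual_service, None)


pool = VipPool("192.168.20.10", "192.168.20.12")
print(pool.allocate("web-vs"))  # 192.168.20.10
print(pool.allocate("api-vs"))  # 192.168.20.11
pool.release("web-vs")
print(pool.allocate("db-vs"))   # 192.168.20.10 (reuses the released address)
```

Sizing the pool matters: once every address in the range is assigned, further virtual service creation fails until an address is released or the range is extended.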

Global Load Balancing Across Two or More SDDCs in VMware Cloud on AWS

As shown in Figure 3, NSX ALB components are deployed per SDDC and interact with each other to provide the Global Server Load Balancing (GSLB) functionality of NSX ALB.

In this design:

- SDDCs can be in a single AZ or in multiple AZs.

- Connectivity between the SDDCs can be established over IPsec or via VMware Transit Gateway.

- The Controllers need connectivity to the local SEs and to the remote Controller.

- Applications deployed across the SDDCs should have reachability to each other and to the Service Engine instances.

- The corporate DNS should sub-delegate the domain to NSX ALB for DNS-based load balancing.
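To make DNS-based load balancing concrete: once the corporate DNS sub-delegates a domain, queries for the application name are answered with the VIP of one of the participating sites. The sketch below assumes a plain round-robin over healthy sites. That policy, the `GslbService` class, the FQDN, and the addresses are illustrative assumptions and not a description of NSX ALB's actual algorithms.

```python
import itertools


class GslbService:
    """Toy DNS responder: rotates answers across the sites marked healthy."""

    def __init__(self, fqdn, site_vips):
        self.fqdn = fqdn.rstrip(".").lower()
        self.site_vips = dict(site_vips)  # site name -> VIP address
        self.healthy = set(self.site_vips)
        self._counter = itertools.count()

    def mark_down(self, site):
        self.healthy.discard(site)

    def mark_up(self, site):
        if site in self.site_vips:
            self.healthy.add(site)

    def resolve(self, query_name):
        """Return a VIP for the query, or None if there is nothing to answer."""
        if query_name.rstrip(".").lower() != self.fqdn:
            return None
        candidates = [s for s in self.site_vips if s in self.healthy]
        if not candidates:
            return None
        site = candidates[next(self._counter) % len(candidates)]
        return self.site_vips[site]


# Hypothetical sub-delegated name and per-SDDC VIPs.
service = GslbService(
    "app.gslb.example.com",
    {"sddc-a": "10.10.20.10", "sddc-b": "10.20.20.10"},
)
print(service.resolve("app.gslb.example.com"))  # 10.10.20.10
print(service.resolve("app.gslb.example.com"))  # 10.20.20.10
service.mark_down("sddc-a")
print(service.resolve("app.gslb.example.com"))  # 10.20.20.10
```

The example also shows why the Controllers need reachability to each site: an answer is only useful if the health state behind it is current.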

For instructions on how to set up GSLB in VMC, please refer to this guide.

NSX Advanced Load Balancer for Tanzu Kubernetes Grid in VMware Cloud on AWS

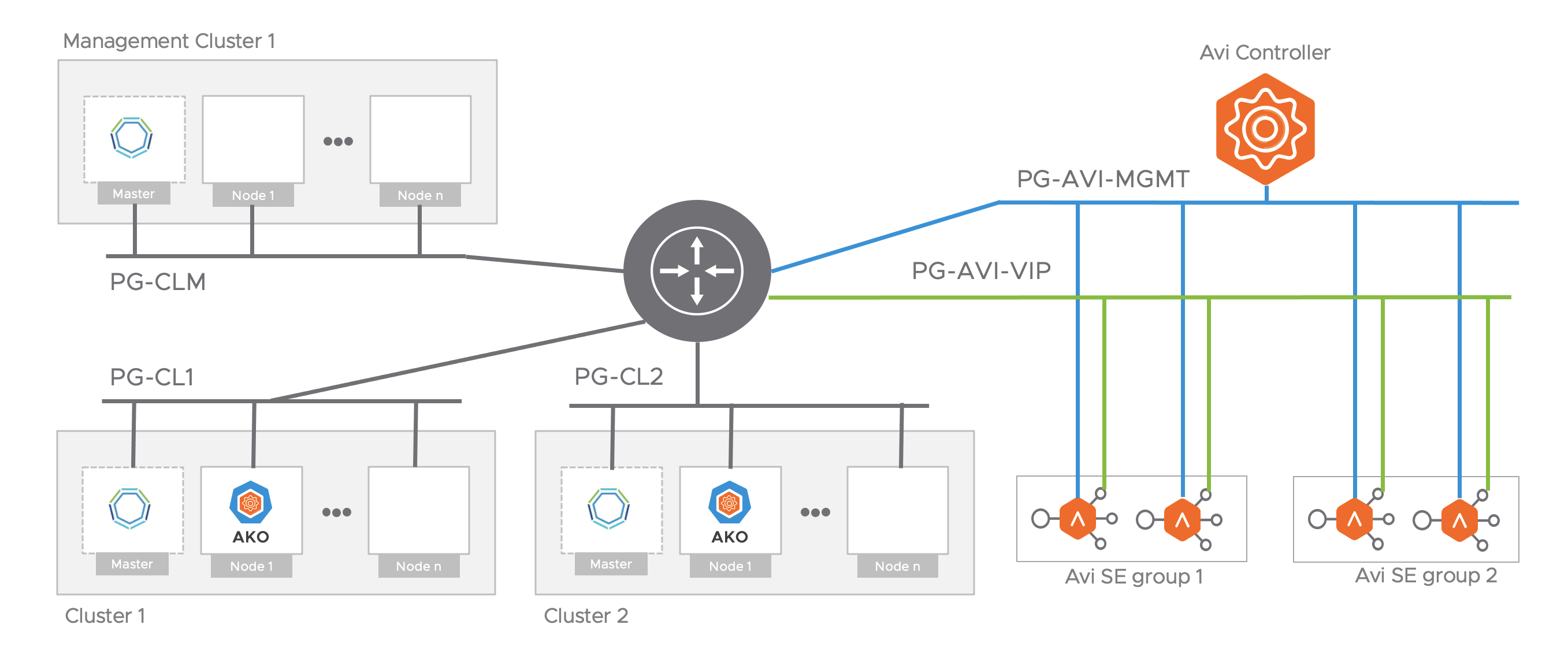

Figure 4 - NSX ALB Network Topology for TKG

Figure 4 shows the NSX ALB network topology for Tanzu Kubernetes Grid.

In this design:

- The Tanzu Kubernetes Grid management cluster is deployed on a dedicated logical segment (TKG Management).

- One or more TKG workload clusters are deployed on a dedicated logical segment.

- The Service Engine VMs establish network connectivity with the TKG workload clusters via static routes.

- Each workload cluster is treated as a tenant and has its own dedicated Service Engine group and Service Engine VMs.

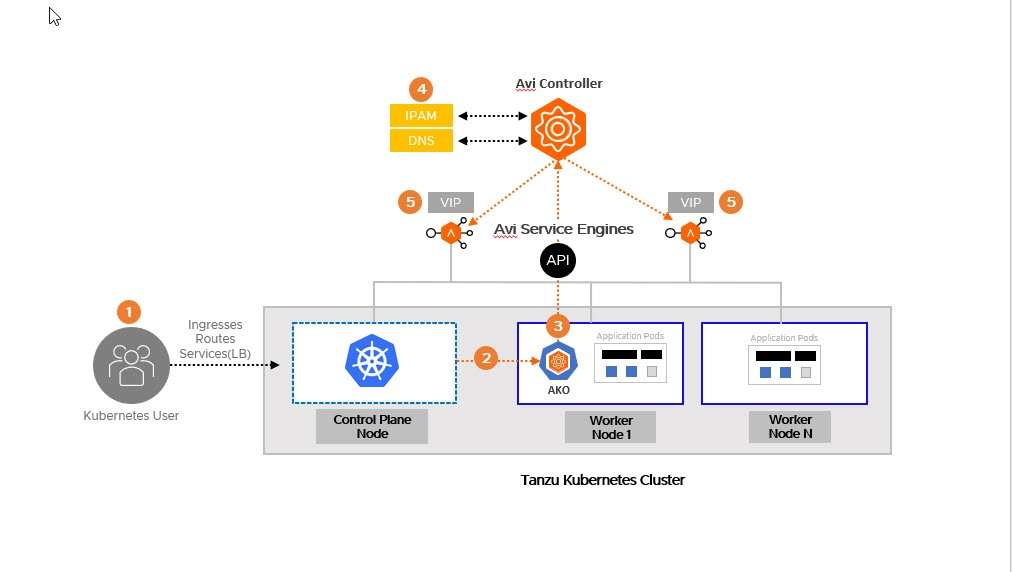

- Load balancing for K8 workload is provided via the Avi Kubernetes Operator component. Each TKG workload cluster has an instance of AKO running. The network connectivity of AKO with NSX ALB components is shown in the below diagram.

Fig 5, AKO interaction with NSX ALB

Application Migration Between On-Prem and VMware Cloud on AWS

Figure 6 - NSX ALB Hybrid Deployment for Application Migration

This design is commonly used for migrating applications from an on-prem datacenter to a VMC on AWS SDDC. The placement of NSX ALB Controllers and Service Engines (SEs) depends on the end goal. For example, if the goal is to migrate everything to a VMC SDDC and retire the on-prem datacenter, Controllers and SEs can be deployed directly in VMC. Load balancing of applications in the on-prem environment is provided via local SE instances deployed on-prem.

Once the applications are migrated to the VMC SDDC, the associated virtual services can be migrated without impact between Service Engines hosted within the VMC SDDC and the on-prem datacenter. Once the virtual services are migrated, the on-prem SE instances can be discarded.

In this design:

- The application servers and the NSX ALB components are placed on the L2 extended network.

- Connectivity between the NSX ALB Controllers and the Service Engines is over L3.

- The latency between Controllers must be less than 10 ms.

- The latency between the Controllers and the Service Engines is recommended to be less than 80 ms.
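The two latency figures above lend themselves to a quick pre-migration check. The sketch below is a minimal example under stated assumptions: the link labels and measured values are hypothetical, and how latency is measured (for example averaged ping results) is left to the reader.

```python
# Thresholds taken from the guidance above, in milliseconds.
CONTROLLER_TO_CONTROLLER_LIMIT_MS = 10.0  # requirement ("must")
CONTROLLER_TO_SE_LIMIT_MS = 80.0          # recommendation


def check_latencies(controller_links, se_links):
    """Return findings for links that miss the thresholds.

    Both arguments map a link label to a measured latency in milliseconds.
    """
    findings = []
    for link, latency_ms in sorted(controller_links.items()):
        if latency_ms >= CONTROLLER_TO_CONTROLLER_LIMIT_MS:
            findings.append(f"ERROR {link}: {latency_ms} ms (must be < 10 ms)")
    for link, latency_ms in sorted(se_links.items()):
        if latency_ms >= CONTROLLER_TO_SE_LIMIT_MS:
            findings.append(f"WARN {link}: {latency_ms} ms (recommended < 80 ms)")
    return findings


# Hypothetical measurements.
controller_links = {"ctrl-1 <-> ctrl-2": 3.2, "ctrl-1 <-> ctrl-3": 12.5}
se_links = {"ctrl-1 <-> se-onprem-1": 45.0, "ctrl-1 <-> se-vmc-1": 95.0}

for finding in check_latencies(controller_links, se_links):
    print(finding)
```

Controller-to-Controller violations are reported as errors because that limit is stated as a requirement, while the Controller-to-SE figure is only a recommendation and is reported as a warning.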

Design Considerations

- When setting up NSX ALB in VMC, deploy three Controller nodes for high availability.

- Ensure that the required firewall rules are in place for a successful deployment of NSX ALB. For the full list of ports and protocols that must be allowed through the firewall, please see this guide.

- If more than one TKG workload cluster is deployed, it is recommended to run them on a dedicated logical segment.

- It is recommended to have a dedicated Service Engine group and dedicated Service Engine VMs per TKG workload cluster.

- Because Service Engines are shared among virtual services, CPU and memory reservations can be configured on the Service Engine VMs to guarantee performance.

- Public IPs used for NSX ALB incur charges. For details, see the VMware Cloud on AWS Pricing Guide.

Caveats

- For Service Engines deployed in N+M HA mode, the VS placement is by default compact. In this case, a new set of service engines will not be spun up until the existing ones have exhausted capacity for VS placement.

- If the SDDC is over-provisioned and reservations are used on the Service Engine VMs, you may encounter issues powering on the SEs in a failure scenario.

- Service Engine connectivity with application networks over layer-2 is supported when the number of application networks is less than eight. For larger deployments, SE connectivity with the application networks has to be over L3.

- When the leader node (Controller) goes down, one of the follower nodes is promoted to leader. This triggers a warm restart of the processes on the remaining nodes in the NSX ALB Controller cluster. During the warm restart, the NSX ALB Controller REST API is unavailable for 2-3 minutes.
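The compact placement behavior from the first caveat can be sketched as a first-fit rule: fill existing Service Engines before creating a new one. This is a simplified model, not NSX ALB's placement logic; measuring capacity as a virtual service count per SE, the function name, and the parameter names are all assumptions made for illustration.

```python
def place_virtual_service(se_loads, capacity_per_se, max_service_engines):
    """Place one virtual service and return the index of the chosen SE.

    se_loads is a list holding the number of virtual services on each SE
    and is updated in place.
    """
    # Compact placement: reuse the first SE that still has room.
    for index, load in enumerate(se_loads):
        if load < capacity_per_se:
            se_loads[index] += 1
            return index
    # Only when every existing SE is full is a new one created.
    if len(se_loads) < max_service_engines:
        se_loads.append(1)
        return len(se_loads) - 1
    raise RuntimeError("no capacity left in the Service Engine group")


loads = []
chosen = [place_virtual_service(loads, capacity_per_se=2, max_service_engines=3)
          for _ in range(5)]
print(chosen)  # [0, 0, 1, 1, 2]
print(loads)   # [2, 2, 1]
```

The third Service Engine appears only with the fifth virtual service, which mirrors the caveat: new SEs are not spun up until the existing ones have no placement capacity left.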