Designlet: VMware Transit Connect for VMware Cloud on AWS

Introduction

VMware Transit Connect provides a high-performance, scalable, and flexible network connectivity solution for VMware Cloud on AWS SDDCs. It provides connectivity between multiple SDDCs, as well as between SDDCs and native AWS Transit Gateways (TGWs), VPCs, and Direct Connect Gateways (DXGWs) as a path to on-premises networks, within the same Region or across multiple Regions. SDDC Groups can also be connected to each other.

Scope of the Document

This document will describe the considerations and design options when implementing VMware Transit Connect (also called SDDC Groups or vTGW) for connectivity to VMware Cloud on AWS SDDCs, and how that can impact other connectivity that may be in place.

Glossary

VMware Transit Connect: A VMware-managed service that enables network connectivity between VMware Cloud on AWS SDDCs in one or more Regions, as well as with native AWS networks. It is based on the AWS Transit Gateway.

vTGW: An abbreviation that references the VMware-managed AWS Transit Gateway used by VMware Transit Connect. It is often used to refer to the VMware Transit Connect service.

SDDC Group: A grouping of SDDCs and external AWS attachments within the VMware Transit Connect service. Multiple SDDC Groups are supported within an Org, but a given SDDC can only be a member of one SDDC Group at a time.

TGW: AWS Transit Gateway connects Amazon Virtual Private Clouds (VPCs) and on-premises networks through a central hub. AWS Transit Gateway acts as a highly scalable cloud router.

VPC: Amazon Virtual Private Cloud is a service that lets you launch AWS resources in a logically isolated virtual network that you define. An Amazon VPC is scoped to a Region and can contain multiple subnets that are scoped to an Availability Zone (AZ).

DXGW: AWS Direct Connect Gateway is a globally available resource that can associate a Direct Connect connection with an AWS Transit Gateway or a virtual private gateway, in the same Region as the Direct Connect circuit or in any remote Region.

SDDC: Software-Defined Data Center. This term refers to a vSphere virtual datacenter, managed by a single vCenter and NSX Manager. For the purposes of this document, an SDDC refers to a VMware Cloud on AWS SDDC.

CGW: Compute Gateway. This refers to the default Compute Gateway router in a VMware Cloud on AWS SDDC. Its firewall protects all uplinks, and traffic from segments connected to either the default CGW or any Custom Tier-1 gateway passes through it.

Managed Prefix-List: A set of CIDR blocks that can be managed by one AWS account and shared with another. This list can be used in route tables and security groups to simplify operations.

Summary and Considerations

| Use Case | Providing direct, native connectivity between multiple VMware Cloud on AWS SDDCs, connectivity to native AWS services |

| Pre-requisites | • All SDDC Group members must have non-overlapping management subnets. • Ideally, all networks across SDDC Group members should be non-overlapping, because when the same route is advertised more than once, only one is selected as active, in a non-deterministic manner. • Access to the AWS accounts used for external connectivity (VPC, TGW, DXGW), to accept attachments and shared prefix-lists and to update route tables. |

| General Considerations/Recommendations | SDDC Group members will prefer the SDDC Group connection between themselves over most other paths that are configured. Therefore, separate groups may be required if inter-SDDC traffic must be inspected or sent over a specific path. Multi-Region connectivity can become complex when combining SDDCs in multiple Regions with AWS external connections as each Region requires a separate attachment. It may be operationally simpler to maintain a single Region per SDDC Group model, and leverage SDDC Group-to-Group connections when direct SDDC connectivity is required between groups. |

| Performance Considerations | AWS TGWs are rated at 100 Gbps or 7.5 Mpps per attachment. Maximum MTU is 8500 bytes (packets received above 8500 bytes are dropped). There is no support for PMTUD, but MSS clamping is enforced. |

| Network Considerations/Recommendations | Always ensure traffic paths are symmetrical. This requires aligning the AWS route tables associated with subnets containing resources or services that communicate with SDDCs, so that they send traffic back to the SDDC's networks over the same path the SDDC selects for that traffic. Firewalling can be done at multiple locations, and all of them must permit the traffic for communication to succeed: • Distributed Firewall (DFW) • Custom Tier-1 Gateway Firewall (if the segment is attached to a custom Tier-1 gateway) • Default Compute Gateway Firewall (applies to segments attached to the default Compute Gateway as well as those attached to custom Tier-1 gateways) • AWS Security Group(s) associated with the external connection (VPC, DXGW, native AWS TGW) • AWS Network ACL for the VPC • AWS Security Group(s) associated with services or instances communicating with the SDDC. Leverage managed prefix-lists wherever possible to simplify operations; they can be used in both route tables and security groups. Avoid attaching the Connected VPC to the SDDC Group. If it is attached to a TGW connected to the SDDC Group, configure the SDDC Group route for that TGW as a less-specific summary containing the VPC's CIDR, to minimize the chance of asymmetrical routing. When using a DXGW, traffic cannot hairpin through a single DXGW, so a TGW or security VPC model should be used for any connectivity that remains within AWS. If this is not possible, advertise the destinations over different DXGWs, which allows traffic to transit through the on-premises network between them. |

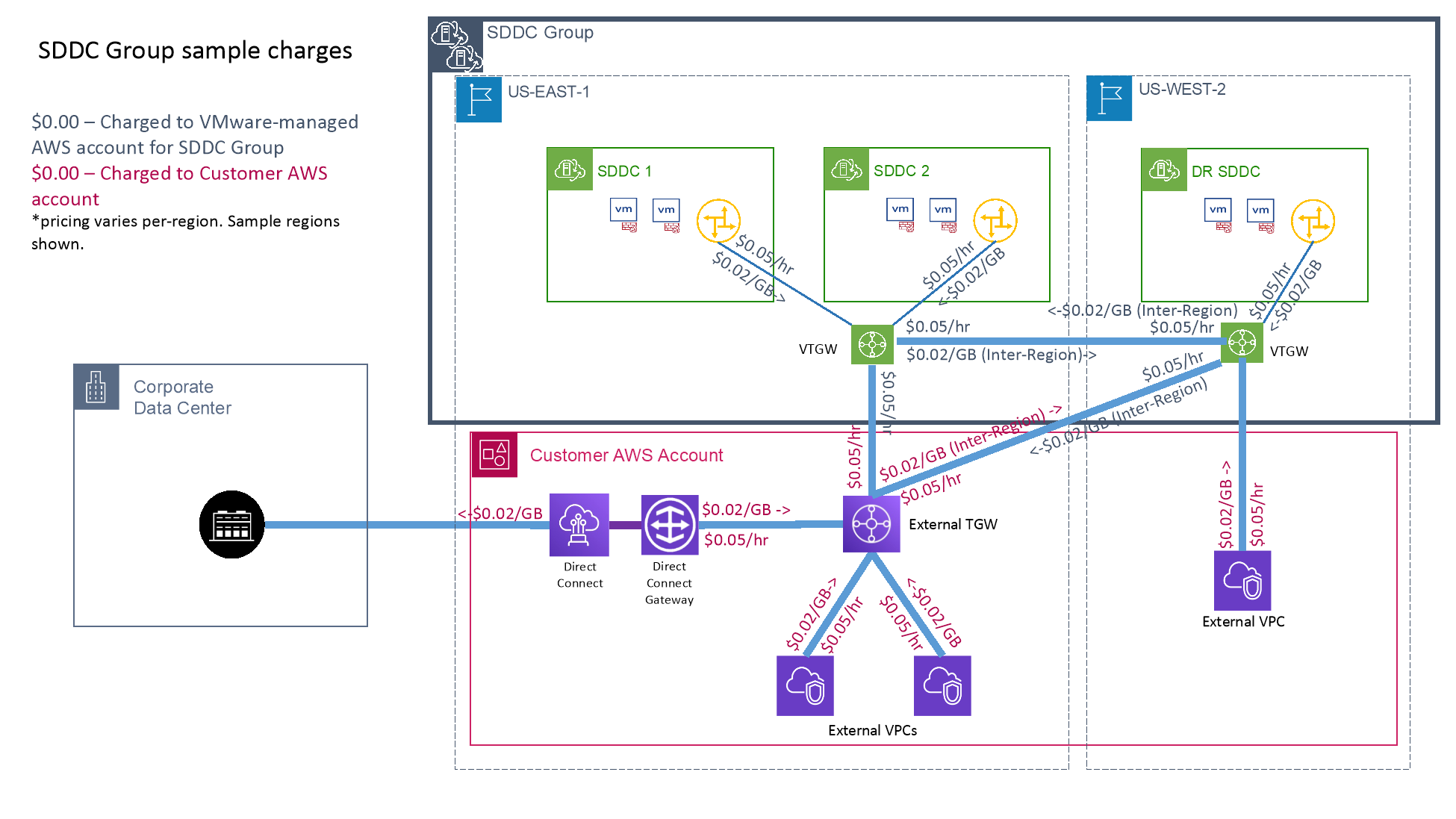

| Cost Implications | There are 2 costs associated with Transit Connect. The first is an hourly charge per attachment. Each SDDC counts as 1 attachment, and every external connection (VPC, TGW, or DXGW) also adds 1 attachment (with TGW peering attachments, each TGW is charged for its side of the attachment). When an SDDC Group spans multiple Regions, there are 2 additional attachment charges per Region pair. The second charge is for data processing. Within the same Region, traffic is charged at $0.02/GB in most Regions. Traffic is charged only once as it transits the TGW, even when it continues on to another TGW. Traffic to or from a different Region incurs an additional inter-Region data transfer charge. Data transfer charges are always billed to the account where the traffic originated. VMware passes the TGW charges associated with Transit Connect on to the customer without any change from the AWS rates. |

| Document Reference | Creating and Managing SDDC Groups • Managed Prefix-List for Connected VPC |

| Last Updated | October 2023 |

Background

Cost Planning

There are a few basic principles that will provide a better understanding of how charges for VMware Transit Connect are determined:

- Billing is based on the AWS charges for Transit Gateway attachments, which are billed hourly, and the AWS data processing charges for traffic that flows through the TGW.

- Data processing charges are always billed to the account where the traffic originates.

- For charges billed to the VMware-managed account for the SDDC group, VMware bills them to the end customer without any additional uplift. Charges are billed in arrears for the month.

- When traffic passes through more than one TGW over a peering connection, only the first TGW charges for data processing.

- Traffic transiting to a different Region will incur inter-Region charges in addition to data processing charges.
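To make these principles concrete, here is a minimal Python sketch of a monthly estimate. Only the $0.02/GB data processing rate comes from this document; the hourly attachment rate, the inter-Region transfer rate, and the 730-hour month are placeholder assumptions, so substitute current AWS Transit Gateway pricing for your Regions. The `TransitConnectUsage` and `estimate_monthly_cost` names are hypothetical.

```python
from dataclasses import dataclass

# Assumption: a 730-hour month, a common approximation for hourly billing.
HOURS_PER_MONTH = 730


@dataclass
class TransitConnectUsage:
    sddc_count: int               # each SDDC is one attachment
    external_attachments: int     # VPC, TGW, and DXGW attachments
    region_pairs: int             # each Region pair adds 2 peering attachments
    gb_processed: float           # GB sent into the TGW by all attachments
    gb_inter_region: float = 0.0  # part of that traffic which crosses Regions


def estimate_monthly_cost(
    usage: TransitConnectUsage,
    attachment_hourly_rate: float = 0.05,    # ASSUMED placeholder, varies by Region
    processing_rate_per_gb: float = 0.02,    # "most Regions", per this document
    inter_region_rate_per_gb: float = 0.02,  # ASSUMED placeholder, varies by Region pair
) -> float:
    attachments = (
        usage.sddc_count + usage.external_attachments + 2 * usage.region_pairs
    )
    attachment_cost = attachments * attachment_hourly_rate * HOURS_PER_MONTH
    # Data processing is charged once, at the first TGW, even across peering.
    processing_cost = usage.gb_processed * processing_rate_per_gb
    # Inter-Region transfer is charged in addition to data processing.
    transfer_cost = usage.gb_inter_region * inter_region_rate_per_gb
    return round(attachment_cost + processing_cost + transfer_cost, 2)


print(estimate_monthly_cost(TransitConnectUsage(2, 1, 0, 1000.0)))
```

With two SDDCs and one VPC attachment in a single Region processing 1,000 GB, this gives 3 × $0.05 × 730 + 1,000 × $0.02 = $129.50 under the assumed rates. The sketch sums everything into one figure; in practice, data processing is billed to whichever account originated the traffic.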

Route / Path control & priority

VMware Transit Connect does not use any dynamic routing protocols. However, most of the routing is performed through automation, simplifying management and operations. We’ll break down the routing controls and level of automation for each of the different types of connections supported by an SDDC Group:

SDDCs

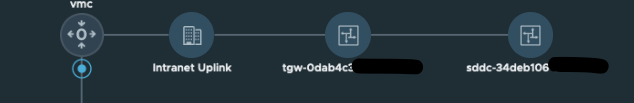

SDDCs share a single uplink for DX Private VIF and Transit Connect, called the INTRANET uplink (also called the Direct Connect Interface on the Compute Gateway Firewall). This means that any routes advertised over one will also be advertised over the other. There is one difference in how the SDDC's management network is advertised: over a DX Private VIF, the management CIDR is broken down into 3 subnets, whereas it is advertised to the SDDC Group as a single CIDR.

By default, all routed segments attached to the default CGW are advertised to the SDDC Group. This can be managed in two ways: by defining route aggregation(s) for the INTRANET Connectivity Endpoint, and by enabling the Egress Filtering option on the Intranet Uplink. Egress Filtering disables the default advertisement of routed segments attached to the default CGW, so that only the route aggregations applied to the INTRANET Connectivity Endpoint are advertised. The management subnet(s) are always advertised over the DX Private VIF and to any SDDC Group the SDDC is attached to. Segments connected to custom Tier-1 routers are not advertised over the DX Private VIF or to the SDDC Group; route aggregation(s) must be defined on the INTRANET Connectivity Endpoint to advertise any networks associated with custom Tier-1 routers.
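The advertisement rules above can be modeled in a few lines of Python, which is handy for checking a planned segment layout before enabling Egress Filtering. This is a hypothetical, IPv4-only sketch, not VMware's implementation; in particular, it assumes that a CGW segment covered by an aggregation is represented by the aggregate rather than advertised on its own.

```python
import ipaddress


def advertised_to_sddc_group(
    management_cidr: str,
    cgw_segments: list[str],
    custom_t1_segments: list[str],
    aggregations: list[str],
    egress_filtering: bool,
) -> tuple[list[str], list[str]]:
    """Return (advertised prefixes, custom Tier-1 segments left unadvertised)."""
    aggs = [ipaddress.ip_network(a) for a in aggregations]
    # The management CIDR and INTRANET route aggregations are always advertised.
    advertised = {ipaddress.ip_network(management_cidr), *aggs}
    if not egress_filtering:
        for seg in map(ipaddress.ip_network, cgw_segments):
            # ASSUMPTION: a segment inside an aggregation is covered by the aggregate.
            if not any(seg.subnet_of(a) for a in aggs):
                advertised.add(seg)
    # Custom Tier-1 segments are never advertised individually; they are only
    # reachable when a route aggregation covers them.
    unadvertised_t1 = [
        s for s in custom_t1_segments
        if not any(ipaddress.ip_network(s).subnet_of(a) for a in aggs)
    ]
    return sorted(str(n) for n in advertised), unadvertised_t1


adv, missing = advertised_to_sddc_group(
    "10.2.0.0/16", ["192.168.1.0/24"], ["172.16.5.0/24"], [], egress_filtering=False
)
print(adv, missing)
```

A non-empty second list is a quick signal that a custom Tier-1 network still needs an aggregation on the INTRANET Connectivity Endpoint.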

AWS VPC

When an AWS VPC in a customer-owned AWS account is attached to the SDDC Group, the VPC's primary and any secondary CIDRs are automatically detected and added to the SDDC Group's route tables. Additional manual routes pointing to the VPC can also be configured on the SDDC Group. These support services that use IP addresses outside the VPC's CIDRs, or a security/transit VPC model in which a device in the VPC performs routing. Note that VPC routers do not natively support transitive routing, so without a separate router, only traffic destined for a device or service within the VPC can be sent over this path.

To ensure traffic returns to the SDDC in a symmetrical path, the AWS route table associated with any subnet communicating with the SDDC will need to be updated with the SDDC networks. The easiest way to do this is to create a prefix list from the SDDC Group’s routing tab and associate it with your AWS account(s). That Prefix-list can be added to any route table, and it will be automatically updated with the routes advertised by SDDC Group members. Only routes learned from SDDCs will be added to this prefix list. It is also possible to manually add routes to the AWS route tables, but those must be maintained if the SDDC members or their networks change.
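As an illustration of the prefix-list approach, the sketch below adds one route per VPC route table that sends the SDDC Group's shared prefix list to the shared vTGW. It assumes `ec2_client` is a boto3 EC2 client in the same Region as the attachment; `add_prefix_list_routes` is a hypothetical helper, and every ID is a placeholder for a value from your own account.

```python
def add_prefix_list_routes(ec2_client, route_table_ids: list[str],
                           prefix_list_id: str, vtgw_id: str) -> None:
    """Route the SDDC Group's shared prefix list to the shared vTGW.

    ec2_client is assumed to be a boto3 EC2 client; IDs are placeholders.
    """
    for route_table_id in route_table_ids:
        # One route per table: the prefix list expands to the SDDC networks
        # and is updated automatically as SDDC Group members change.
        ec2_client.create_route(
            RouteTableId=route_table_id,
            DestinationPrefixListId=prefix_list_id,
            TransitGatewayId=vtgw_id,
        )
```

Usage might look like `add_prefix_list_routes(boto3.client("ec2", region_name="us-west-2"), ["rtb-0example"], "pl-0example", "tgw-0example")`. Using the prefix list rather than individual CIDRs means these routes do not need to be edited when SDDC networks change.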

AWS DXGW

Connections between SDDC Groups and AWS Direct Connect are only supported when using a Direct Connect Gateway (DXGW) with a Transit VIF (TVIF). This connectivity relies on a combination of dynamic BGP route exchange between the DXGW and customer on-prem routers, and static routes between the SDDC Group and the DXGW. The routes advertised by DXGW to on-prem are configured on the DXGW by the customer and are not dynamically updated by a prefix-list or API. These routes can (and should) use summarizations, as there is a limit on the number of routes advertised over the BGP session. Advertising more than the permitted number of routes will cause the BGP session to go into a down state.
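One way to keep the DXGW advertisement list short is to collapse SDDC prefixes with Python's standard `ipaddress` module before entering them as allowed prefixes. This is a sketch: `summarize_allowed_prefixes` is a hypothetical helper, and `max_prefixes` is a parameter you must set from the current AWS Direct Connect quotas, not a value taken from this document.

```python
import ipaddress


def summarize_allowed_prefixes(prefixes: list[str], max_prefixes: int) -> list[str]:
    """Collapse adjacent or overlapping prefixes and enforce a prefix budget.

    max_prefixes is a placeholder: check the current AWS Direct Connect quota.
    """
    networks = [ipaddress.ip_network(p) for p in prefixes]
    # collapse_addresses merges only adjacent or contained networks, so it
    # never advertises address space that was not in the input.
    collapsed = [str(n) for n in ipaddress.collapse_addresses(networks)]
    if len(collapsed) > max_prefixes:
        raise ValueError(
            f"{len(collapsed)} prefixes exceed the limit of {max_prefixes}; "
            "summarize further"
        )
    return collapsed


print(summarize_allowed_prefixes(
    ["10.10.0.0/24", "10.10.1.0/24", "10.10.2.0/23"], max_prefixes=10
))
```

Because `collapse_addresses` is lossless, deliberately broader summaries (which also cover unused space) still have to be chosen by hand.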

Routes advertised from on-prem over BGP are learned by the SDDC Group through automation that polls for updates every 5 minutes. Multiple Direct Connect connections can be associated with the same DXGW for availability, with BGP and BFD determining the active path. The 5-minute polling interval only affects how quickly new or removed advertisements are reflected.

AWS TGW

Connections to a TGW in a customer-owned AWS account work similarly to VPC attachments. However, all routing must be manually configured on the SDDC Group, since there are no default CIDRs learned from a TGW. For the return path to the SDDC, there are generally two route tables that need to be updated in the case of a connection through the TGW: The AWS VPC subnet’s route table must have the SDDC network routes pointing to the customer-managed TGW, and the AWS TGW’s route table must have the SDDC network routes pointing to the SDDC Group (or vTGW)’s attachment. The prefix-list created from the SDDC Group can be used in both route tables to simplify operations.

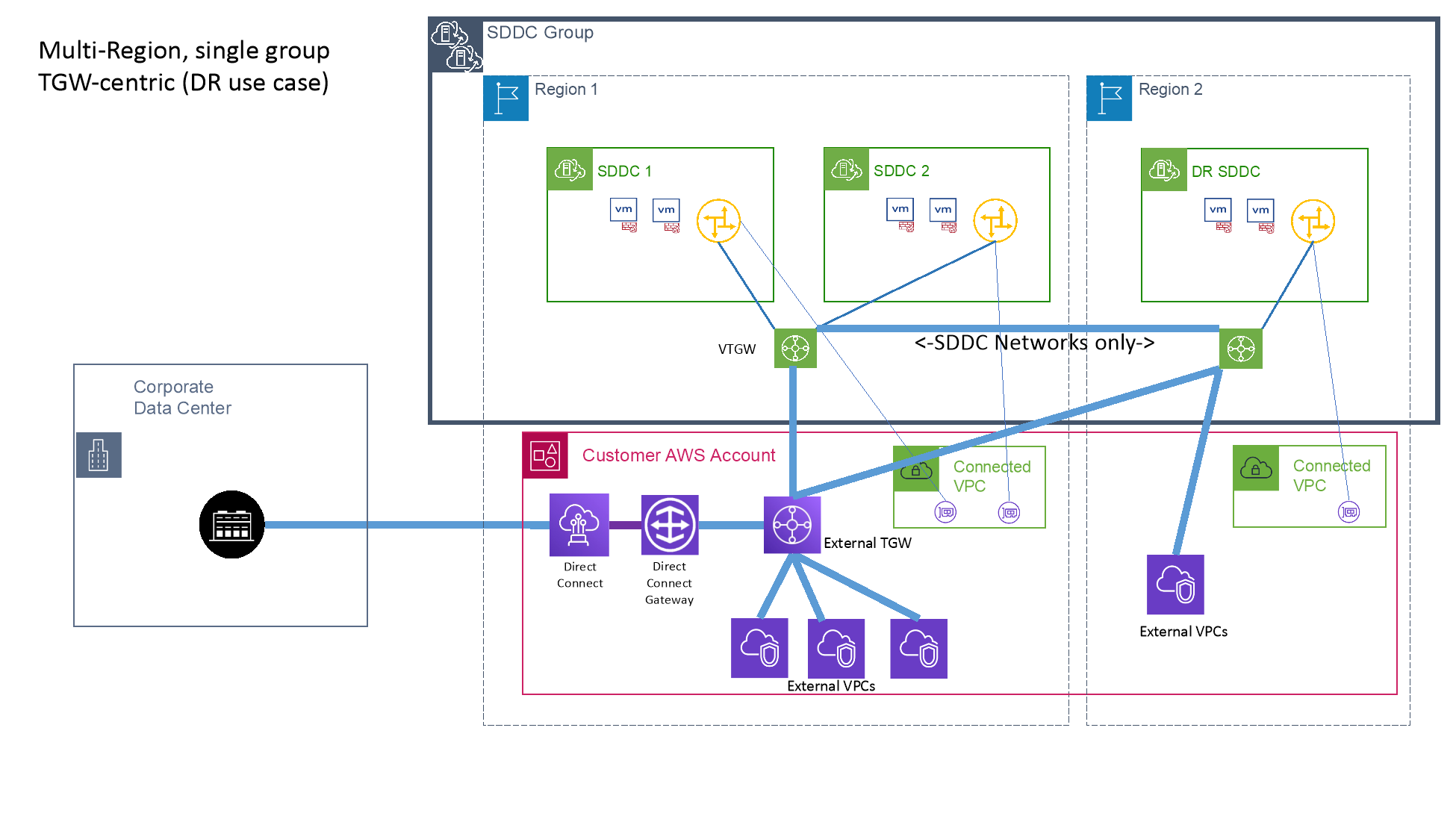
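The two return-path updates described above map to two EC2 API calls. The sketch assumes a boto3 EC2 client in the customer account and the SDDC Group's shared prefix list; `configure_tgw_return_path` is a hypothetical helper and all IDs are placeholders. The TGW route table used in step 2 should be the one associated with the VPC's attachment on the customer TGW.

```python
def configure_tgw_return_path(ec2_client, vpc_route_table_id: str,
                              customer_tgw_id: str, tgw_route_table_id: str,
                              vtgw_attachment_id: str, prefix_list_id: str) -> None:
    """Send SDDC networks back toward the SDDC Group through a customer TGW.

    ec2_client is assumed to be a boto3 EC2 client; IDs are placeholders.
    """
    # 1. VPC subnet route table: SDDC networks -> customer-managed TGW.
    ec2_client.create_route(
        RouteTableId=vpc_route_table_id,
        DestinationPrefixListId=prefix_list_id,
        TransitGatewayId=customer_tgw_id,
    )
    # 2. Customer TGW route table: SDDC networks -> attachment toward the vTGW.
    ec2_client.create_transit_gateway_prefix_list_reference(
        TransitGatewayRouteTableId=tgw_route_table_id,
        PrefixListId=prefix_list_id,
        TransitGatewayAttachmentId=vtgw_attachment_id,
    )
```

Referencing the same prefix list in both tables keeps the VPC and TGW routing aligned as SDDC networks change.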

External SDDC Group (Group to Group)

SDDC Groups can be connected to each other to enable full-mesh connectivity between the SDDC members of each group. Up to 3 groups, spanning up to 3 unique Regions, can be connected in this manner. Transit Connect automatically updates routes between each group and Region to enable communication between the SDDCs, but communication to or from an external attachment is limited to SDDCs in the attachment's own group and Region. To enable connectivity between SDDCs in another group and an external attachment, the attachment must be connected to each SDDC Group and Region where that connectivity is required.
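The reachability rules above can be captured in a small model, which helps when deciding where an external attachment must be repeated. This is a hypothetical sketch: the `Sddc` and `Topology` types are illustrative only, and it assumes every connected group pair is recorded explicitly.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Sddc:
    name: str
    group: str
    region: str


@dataclass
class Topology:
    # Connected SDDC Group pairs (ASSUMPTION: each pair is listed explicitly).
    group_links: set[frozenset[str]] = field(default_factory=set)
    # External attachment names keyed by the (group, Region) they attach to.
    attachments: dict[tuple[str, str], set[str]] = field(default_factory=dict)

    def sddc_to_sddc(self, a: Sddc, b: Sddc) -> bool:
        # Members of one group reach each other across Regions, and connected
        # groups form a full mesh between their SDDC members.
        return a.group == b.group or frozenset((a.group, b.group)) in self.group_links

    def sddc_to_attachment(self, sddc: Sddc, attachment: str) -> bool:
        # An attachment only serves SDDCs in its own group and Region.
        return attachment in self.attachments.get((sddc.group, sddc.region), set())


topo = Topology(
    group_links={frozenset(("g1", "g2"))},
    attachments={("g1", "us-east-1"): {"vpc-a"}},
)
print(topo.sddc_to_attachment(Sddc("s2", "g2", "us-west-2"), "vpc-a"))
```

Here `s2` can reach the SDDCs of group `g1`, but not `vpc-a`, until that VPC is also attached to `g2` in `us-west-2`.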

Relative Priority

This is considered from the perspective of workloads in the SDDC. Return paths will be dictated by the AWS route table(s) associated with the subnets and/or network services used by the AWS traffic.

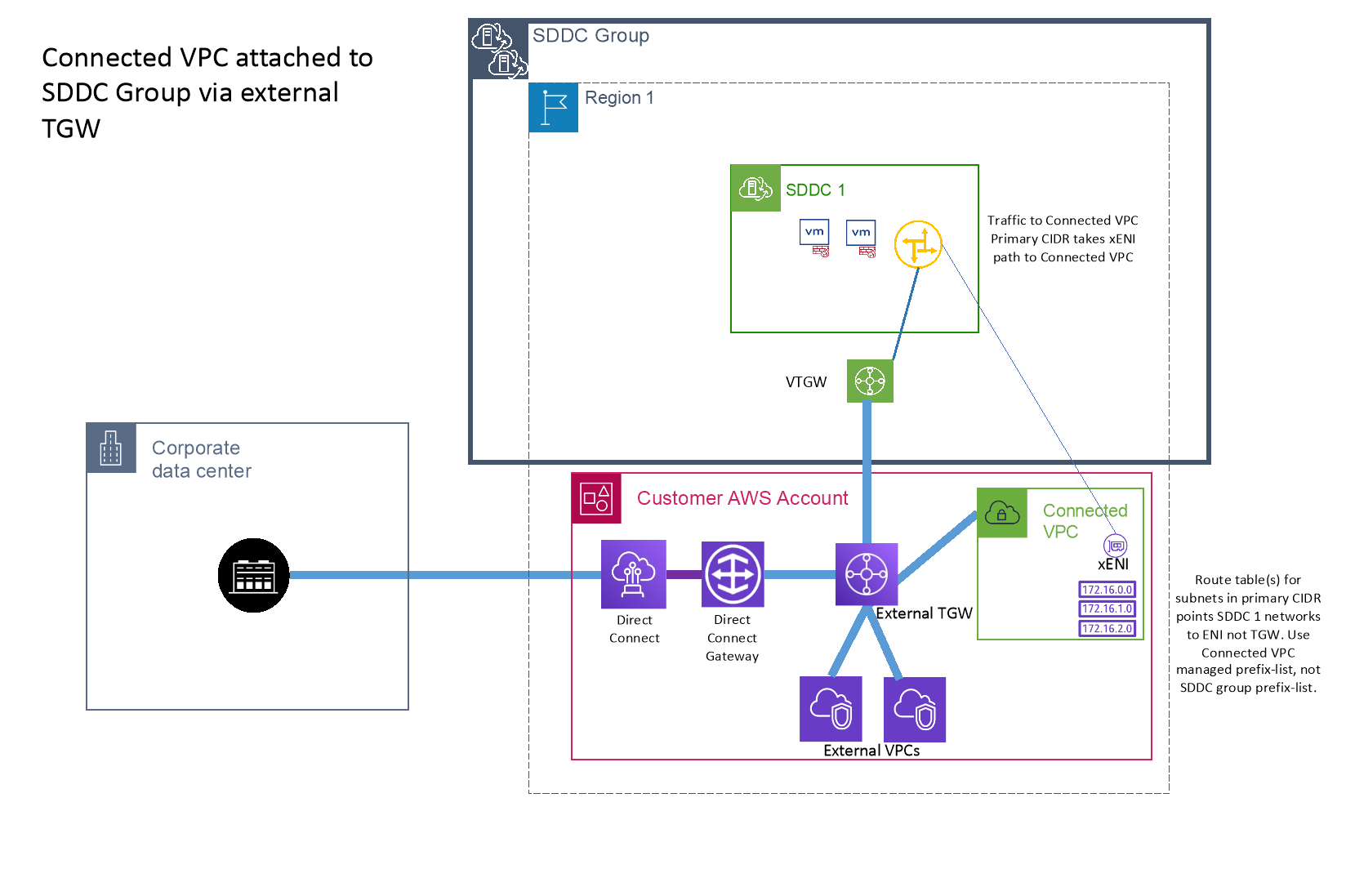

General routing principles apply: the longest (most specific) prefix is preferred. This means that the xENI path will always be the preferred path for destinations in the Connected VPC's primary CIDR.

If the same route is advertised over different connections, the SDDC will use the following priorities:

- Connected VPC

- Route-based VPN (Use VPN as Backup to Direct Connect OFF)*

- Policy-based VPN*

- SDDC Group

- DX Private VIF

- Route-based VPN (Use VPN as Backup to Direct Connect ON)*^

*IPsec VPN will never be used for traffic from ESXi hosts if the SDDC is a member of an SDDC Group or has a DX Private VIF attached.

^Use VPN as Backup to Direct Connect has no impact on SDDC Group priority. Route-based VPN will always perform as though this setting is OFF relative to SDDC Groups.
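The selection logic (longest prefix first, then the priority order above as a tie-breaker) can be sketched as follows. This is a simplified, hypothetical model, not the NSX routing implementation: the source names are illustrative, the "Use VPN as Backup to Direct Connect ON" case is left out because its position depends on which other sources are present, and `from_esxi_host` assumes the SDDC is an SDDC Group member or has a DX Private VIF.

```python
import ipaddress
from typing import Optional

# Tie-break order for identical prefixes, per the list above (lower wins).
PRIORITY = {
    "connected_vpc": 0,
    "route_based_vpn": 1,
    "policy_based_vpn": 2,
    "sddc_group": 3,
    "dx_private_vif": 4,
}
VPN_SOURCES = {"route_based_vpn", "policy_based_vpn"}


def select_path(destination: str, routes: list[tuple[str, str]],
                from_esxi_host: bool = False) -> Optional[str]:
    """Return the route source chosen for a destination, or None if no match.

    routes is a list of (prefix, source) pairs.
    """
    dest = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), source)
        for prefix, source in routes
        if dest in ipaddress.ip_network(prefix)
        # ESXi host traffic never uses IPsec VPN in this scenario.
        and not (from_esxi_host and source in VPN_SOURCES)
    ]
    if not candidates:
        return None
    # Longest prefix wins; equal prefixes fall back to the priority order.
    best = min(candidates, key=lambda c: (-c[0].prefixlen, PRIORITY[c[1]]))
    return best[1]


routes = [("10.0.0.0/16", "sddc_group"), ("10.0.0.0/16", "connected_vpc")]
print(select_path("10.0.1.10", routes))
```

In this example both routes are /16, so the Connected VPC wins on priority; a more specific route from any source would win outright.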

If the same route is advertised from multiple endpoints to the SDDC Group (e.g., if 2 SDDCs advertise the same network, or if there is an overlap between a VPC and SDDC network) then only one route will be learned by the SDDC Group, and it is done in a non-deterministic manner (the first route learned will be added, and subsequent ones will be ignored). Therefore, duplicate route advertisements to an SDDC Group should be avoided, as it cannot be relied upon for failover or path consistency.
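Because duplicate advertisements are resolved non-deterministically, it is worth checking planned prefixes for overlaps before adding an SDDC or attachment to a group. Below is a minimal sketch using the standard `ipaddress` module; `find_overlaps` is a hypothetical helper, and the member names and prefixes are sample data.

```python
import ipaddress
from itertools import combinations


def find_overlaps(advertisements: dict[str, list[str]]) -> list[tuple[str, str, str, str]]:
    """Report overlapping prefixes advertised by different SDDC Group members.

    advertisements maps a member name (SDDC or attachment) to its prefixes.
    """
    entries = [
        (member, ipaddress.ip_network(prefix))
        for member, prefixes in advertisements.items()
        for prefix in prefixes
    ]
    conflicts = []
    # Pairwise comparison is fine for the handful of prefixes a group carries.
    for (m1, n1), (m2, n2) in combinations(entries, 2):
        if m1 != m2 and n1.version == n2.version and n1.overlaps(n2):
            conflicts.append((m1, str(n1), m2, str(n2)))
    return conflicts


print(find_overlaps({
    "sddc-a": ["10.2.0.0/16", "192.168.10.0/24"],
    "sddc-b": ["10.3.0.0/16", "192.168.10.0/24"],
    "vpc-x": ["172.31.0.0/16"],
}))
```

The check flags exact duplicates as well as partial overlaps, which matches the recommendation to keep all networks across group members non-overlapping.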

Co-existence with the Connected VPC

While it's possible to attach the Connected VPC either directly to the SDDC Group or to a TGW that is attached to the SDDC Group, care must be taken to ensure traffic paths remain symmetrical. The SDDC will always prefer the xENI (Connected VPC) path for the primary CIDR of the Connected VPC. Any subnets in that primary CIDR should therefore be associated with a route table that has the SDDC's networks pointing to the active ENI (e.g., the main route table, or a route table using the Connected VPC managed prefix list). However, any subnets in secondary CIDRs should be associated with a route table that has the SDDC's networks pointing to the SDDC Group's vTGW attachment. This becomes even more complex if multiple SDDCs are group members and each uses a different Connected VPC. In that case, it may not be possible to use the SDDC Group prefix list, and the routes may need to be configured manually in each route table to ensure traffic takes the same return path as the forward path. The simplest option is to avoid attaching the Connected VPC to the SDDC Group, either directly or through a TGW.

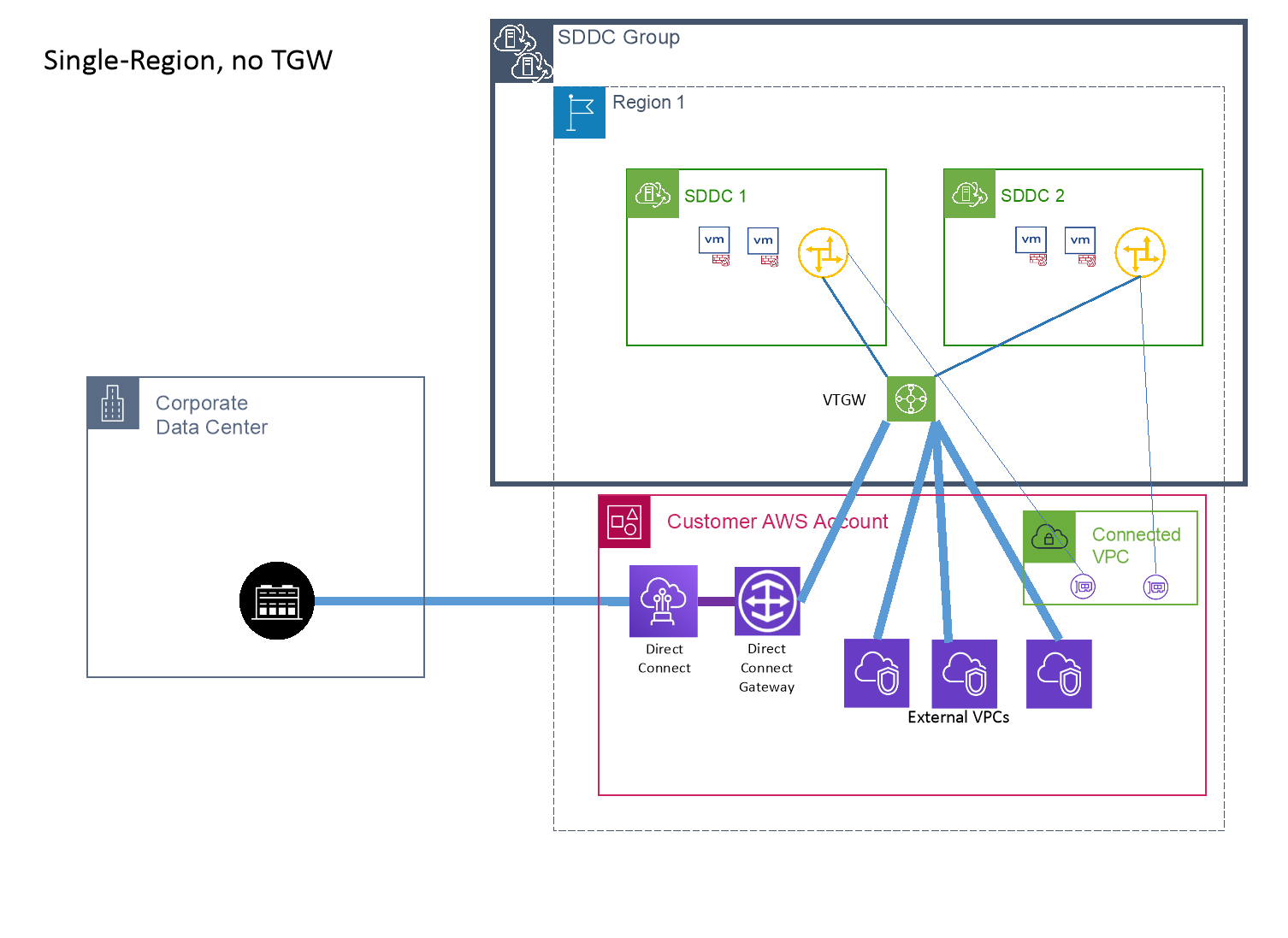
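The subnet-to-route-table rule above can be expressed as a small planning helper. This is a hypothetical sketch (IPv4 only) that only classifies subnets; it does not create or associate route tables. The `"xeni"` and `"vtgw"` labels are illustrative names for the two route tables described above.

```python
import ipaddress


def plan_connected_vpc_route_tables(subnets: list[str], primary_cidr: str) -> dict[str, list[str]]:
    """Group Connected VPC subnets by the return path they need.

    "xeni": subnets in the primary CIDR; SDDC routes should point at the active
            ENI (e.g. the main route table or the Connected VPC prefix list).
    "vtgw": subnets in secondary CIDRs; SDDC routes should point at the
            SDDC Group's vTGW attachment.
    """
    primary = ipaddress.ip_network(primary_cidr)
    plan: dict[str, list[str]] = {"xeni": [], "vtgw": []}
    for cidr in subnets:
        key = "xeni" if ipaddress.ip_network(cidr).subnet_of(primary) else "vtgw"
        plan[key].append(cidr)
    return plan


print(plan_connected_vpc_route_tables(
    ["172.31.1.0/24", "100.64.1.0/24", "172.31.2.0/24"], "172.31.0.0/16"
))
```

With several SDDCs each using a different Connected VPC, a plan like this would be needed per VPC, which is part of why avoiding the attachment is the simpler option.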

Multi-Region

When using SDDC Groups across multiple Regions, it's important to understand that the AWS TGW is scoped to a Region. This means that attachments to native AWS VPCs, DXGWs, or TGWs are made on a per-Region basis. If you want to connect the same VPC, DXGW, or TGW to all Regions of an SDDC Group, you will need to attach it once per Region. A single attachment to an SDDC Group is only reachable by SDDCs in the same Region as the attachment. Similarly, any prefix-list created from an SDDC Group is scoped to a single Region, so an additional prefix-list must be created for each Region in which AWS attachments are made.

SDDCs that are in different Regions are automatically connected by the Transit Connect service. Even though the underlying connectivity is provided by multiple AWS TGWs, Transit Connect will create the necessary peering and routes to provide connectivity between SDDC Group members across all Regions.

Communication to ESXi hosts

As with a Direct Connect (private VIF) attached to an SDDC, when the SDDC is added to an SDDC Group, all communication to or from ESXi hosts’ management VMkernel interface (vmk0) - which includes vSphere replication traffic and VMware Remote Console (VMRC) - will always take the path over the SDDC Group or Direct Connect. There is no connectivity between ESXi hosts and traffic destinations within the SDDC or over IPsec VPN once it is an SDDC Group member and/or a DX private VIF is attached.

MTU

When using Transit Connect, the maximum supported MTU is 8500 bytes. AWS TGW does not support path MTU discovery (PMTUD), but uses MSS clamping so that TCP segments fit within the 8500-byte MTU.

If using HCX L2E over Transit Connect, a further 150 bytes should be deducted for HCX tunnel overhead, meaning endpoint VMs communicating over the L2E should have their MTU set at 8350 or lower, and the HCX Service Mesh uplink interface should be set at 8500 bytes for optimal performance.
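The MTU budget above is simple arithmetic, shown here in Python. The 8500-byte MTU and 150-byte HCX overhead come from this section; the 20-byte IPv4 and TCP header sizes assume no IP or TCP options, so treat the MSS values as illustrative upper bounds rather than the exact values AWS clamps to.

```python
TGW_MTU = 8500          # maximum MTU over Transit Connect (this section)
HCX_L2E_OVERHEAD = 150  # HCX tunnel overhead (this section)
IPV4_HEADER = 20        # ASSUMPTION: no IP options
TCP_HEADER = 20         # ASSUMPTION: no TCP options


def tcp_mss(mtu: int) -> int:
    """Largest TCP payload per segment that fits in one IPv4 packet."""
    return mtu - IPV4_HEADER - TCP_HEADER


vm_mtu_over_l2e = TGW_MTU - HCX_L2E_OVERHEAD

print(tcp_mss(TGW_MTU))          # 8460
print(vm_mtu_over_l2e)           # 8350
print(tcp_mss(vm_mtu_over_l2e))  # 8310
```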

HCX

Like the rest of the SDDC, HCX shares the same uplink for DX and Transit Connect. This means private IP networks defined on the directConnectNetwork1 uplink profile will be advertised both over DX and to the SDDC Group. These networks should not overlap with any other networks in the environment. For HCX service meshes between a VMware Cloud on AWS SDDC and on-prem, the on-prem uplink IP addresses must be reachable over the SDDC Group. This requires them to be added to the DXGW allowed prefixes in the SDDC Group (or configured on the DXGW itself).

While HCX network extension and migration traffic cannot run over a VPN that terminates on the SDDC’s T0, it can go over a VPN within the path, such as when using an AWS VPN terminating on a TGW that is attached to the SDDC Group. In this case, it’s necessary to ensure that the end-to-end path meets HCX’s network underlay requirements.

Implementation

Design Choices

The primary design decisions when deploying SDDC Groups are around the grouping topology. This considers which SDDCs should communicate directly with each other, and what external attachments they need to reach. SDDCs with the same communication requirements can be put into the same group for simplicity.

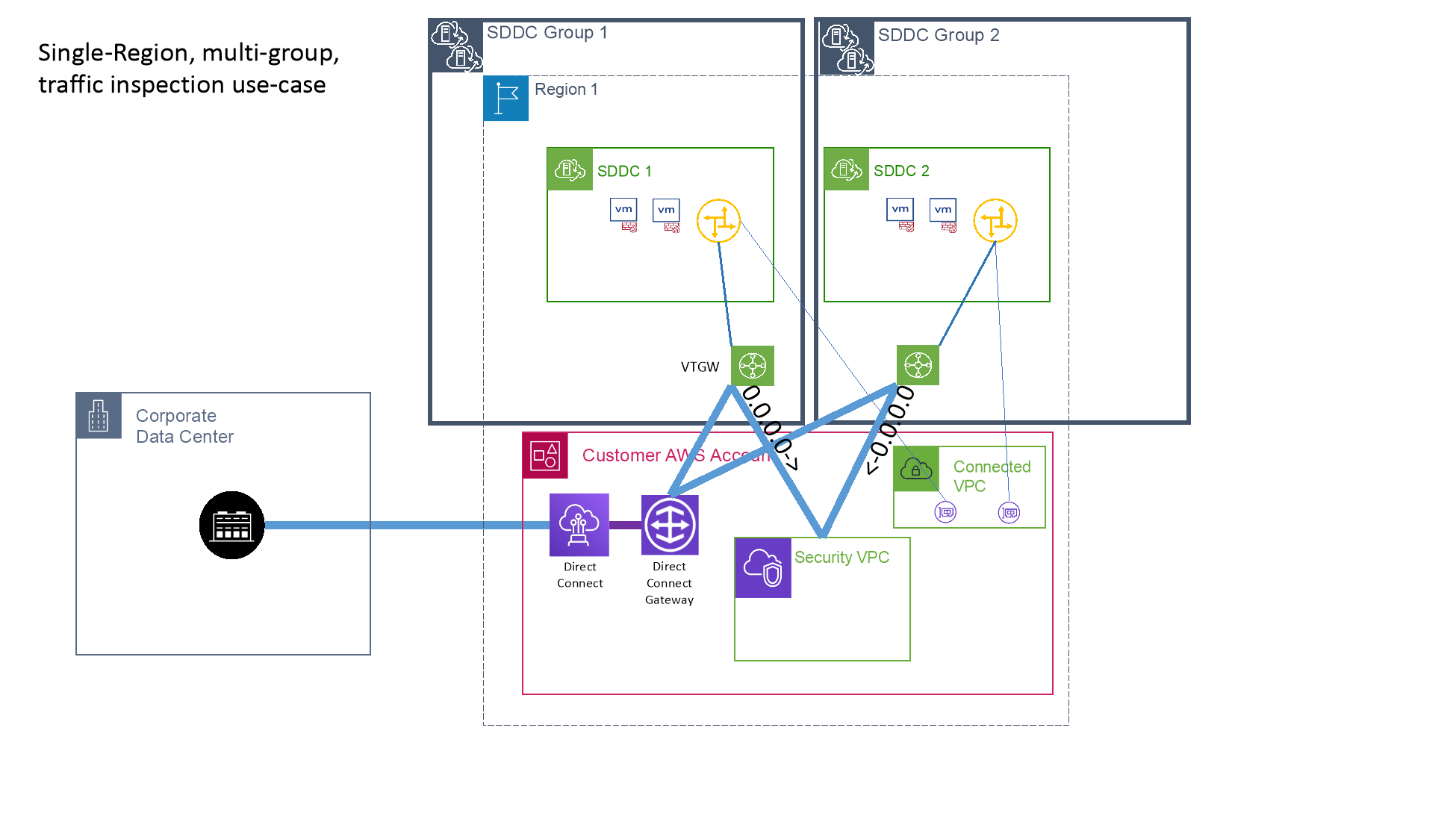

Reasons why this approach may not work include the desire to inspect traffic between SDDCs, or have it route through a specific path. In this scenario, the SDDCs will need to be in different groups so that traffic between them can be routed to a security VPC or back on-prem through a DXGW.

Additional considerations include whether the customer has an existing AWS Transit Gateway in place, with all required connectivity set up, which they want to use for simplicity and standardization. There may also be constraints, such as service quotas (e.g., Direct Connect virtual interface maximums) or route advertisement limitations (e.g., when subnets must come from a larger CIDR advertised to AWS that cannot easily be split out), that dictate routing all connectivity through a native AWS TGW instead of attaching DXGWs and VPCs directly to the vTGW.

Configuration

SDDC Groups are defined independently from SDDCs; however, an SDDC Group must have at least 1 SDDC member. SDDC Groups are defined in the VMC console on the SDDC Group tab under Inventory.

Attaching external AWS connections to an SDDC Group is a multi-step process:

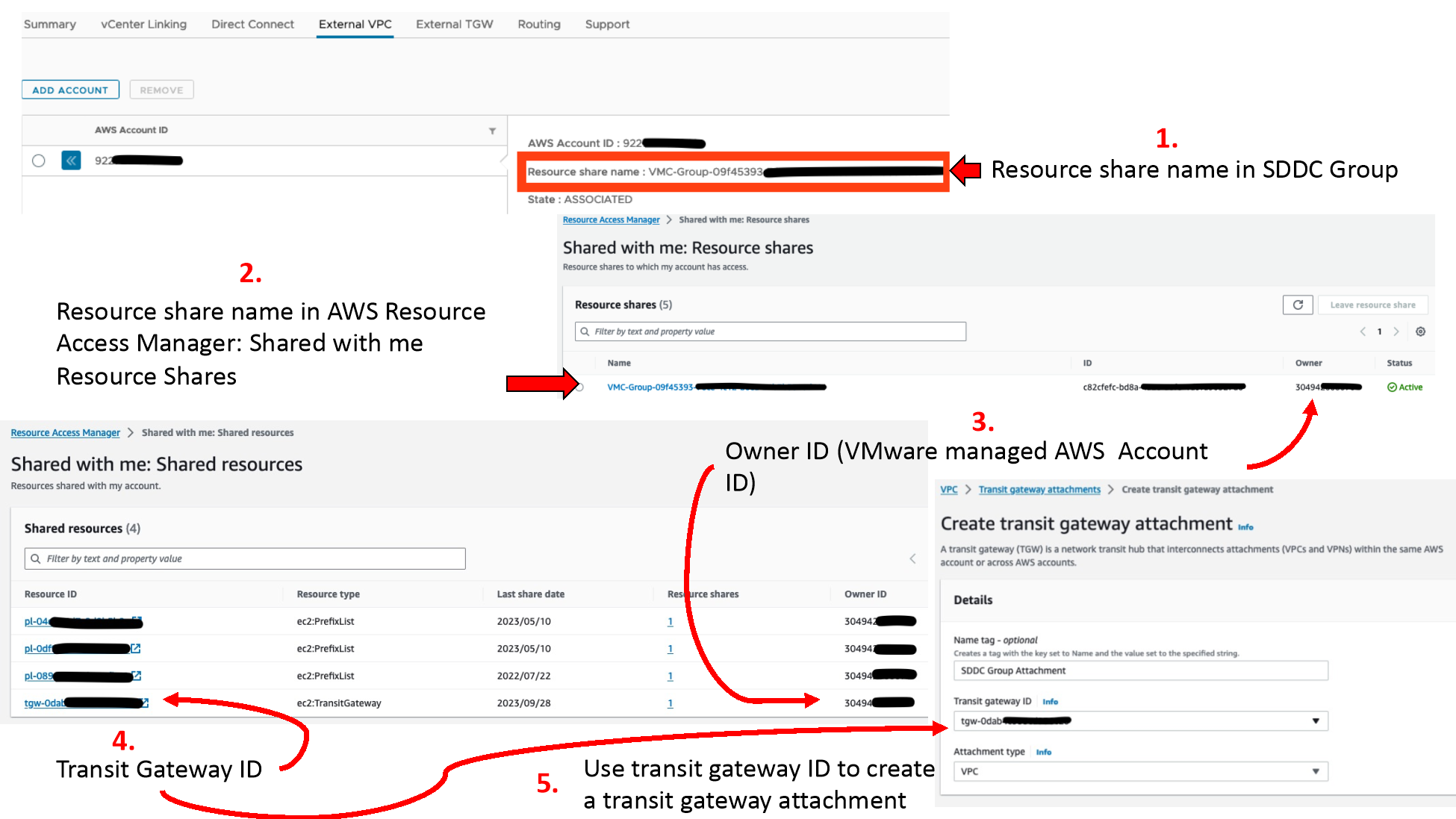

- For attaching VPCs, the AWS account that owns the external connection must first be added to the SDDC Group. This creates a resource share of the VMware-managed TGW (vTGW) used for the SDDC Group, which must be accepted in the AWS account's Resource Access Manager. Once the vTGW is shared with the AWS account, VPCs are attached to it through the TGW attachments section of the AWS account. This creates an attachment request, which must be accepted on the SDDC Group's External VPC page.

- TGW and DXGW attachments are made through the SDDC Group and must then be accepted in the AWS Account’s TGW Attachments or Direct Connect Gateways page respectively.

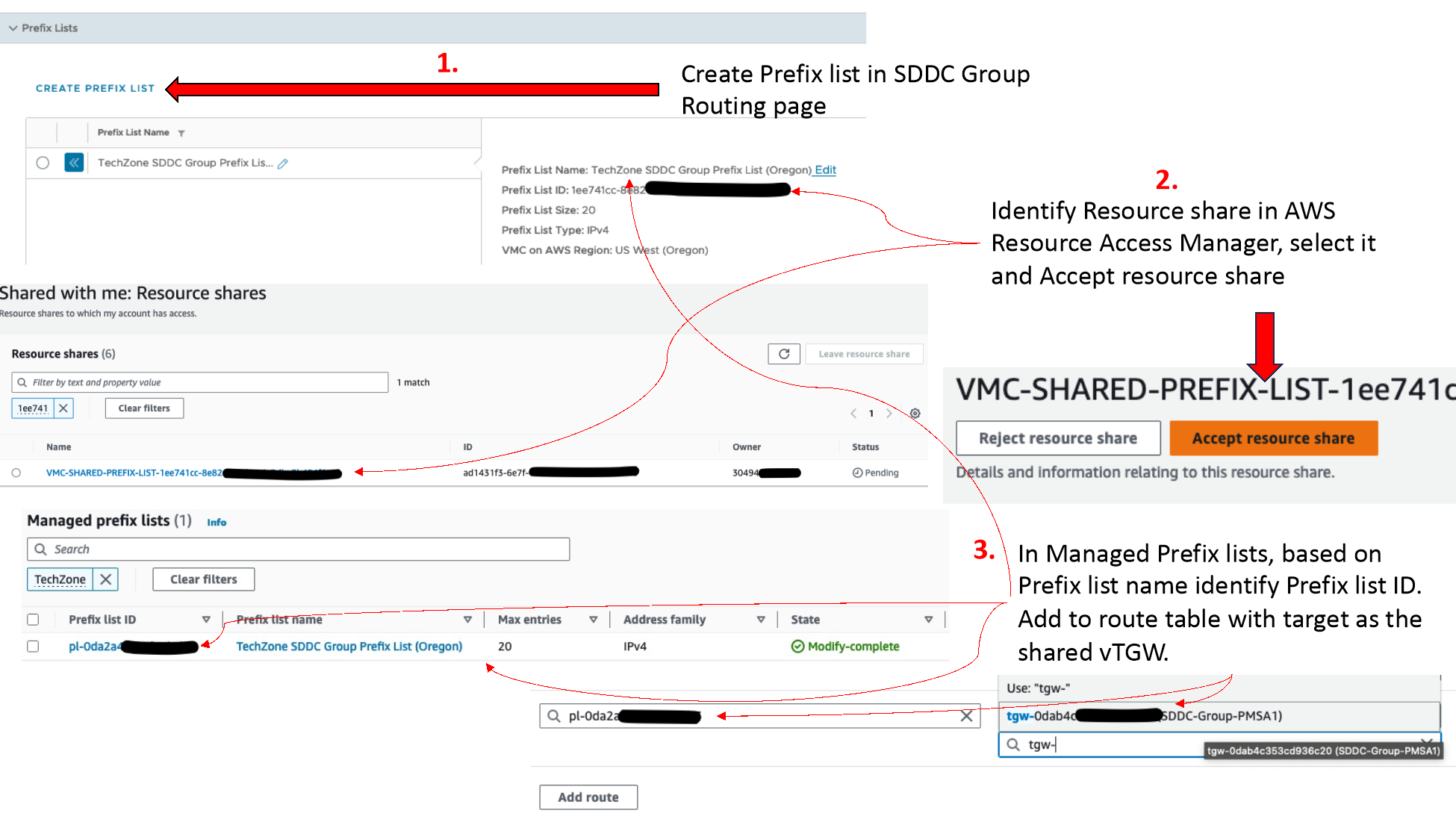

- Shared prefix-lists are created on the SDDC Group's Routing tab by specifying the AWS account and Region to create them for. Each prefix-list must then be accepted in the AWS account's Resource Access Manager.

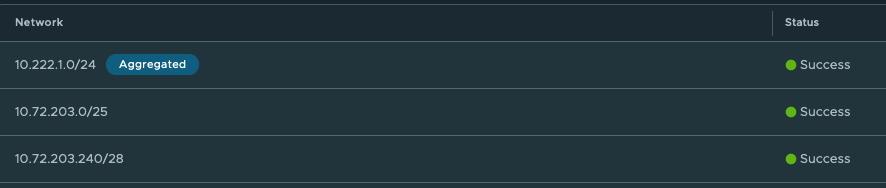
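The customer-account side of these steps can also be scripted. The sketch below shows one possible shape using boto3 clients (`ram`, `ec2`, `directconnect`) created for the attachment's Region. The helper names are hypothetical, and the sender account ID, attachment and proposal IDs, and allowed prefixes are placeholders that you would read from the SDDC Group and the AWS console. Each step still has a matching acceptance or configuration step on the SDDC Group side.

```python
# Hypothetical helpers; each takes a boto3 client and placeholder IDs.


def accept_pending_shares(ram_client, sender_account_id: str) -> list[str]:
    """Accept pending RAM invitations (vTGW and prefix-list shares) from one account."""
    accepted: list[str] = []
    kwargs: dict = {}
    while True:
        page = ram_client.get_resource_share_invitations(**kwargs)
        for inv in page.get("resourceShareInvitations", []):
            if inv.get("status") == "PENDING" and inv.get("senderAccountId") == sender_account_id:
                arn = inv["resourceShareInvitationArn"]
                ram_client.accept_resource_share_invitation(resourceShareInvitationArn=arn)
                accepted.append(arn)
        token = page.get("nextToken")  # follow pagination until exhausted
        if not token:
            return accepted
        kwargs = {"nextToken": token}


def request_vpc_attachment(ec2_client, vtgw_id: str, vpc_id: str,
                           subnet_ids: list[str]) -> str:
    """Attach a VPC to the shared vTGW (AWS allows at most one subnet per AZ)."""
    resp = ec2_client.create_transit_gateway_vpc_attachment(
        TransitGatewayId=vtgw_id, VpcId=vpc_id, SubnetIds=subnet_ids
    )
    # The request is then accepted on the SDDC Group's External VPC page.
    return resp["TransitGatewayVpcAttachment"]["TransitGatewayAttachmentId"]


def accept_tgw_peering(ec2_client, peering_attachment_id: str) -> None:
    """Accept the peering attachment an SDDC Group created toward a customer TGW."""
    ec2_client.accept_transit_gateway_peering_attachment(
        TransitGatewayAttachmentId=peering_attachment_id
    )


def accept_dxgw_proposal(dx_client, dxgw_id: str, proposal_id: str,
                         vtgw_owner_account: str, allowed_prefixes: list[str]) -> None:
    """Accept the SDDC Group's association proposal on a customer DXGW."""
    dx_client.accept_direct_connect_gateway_association_proposal(
        directConnectGatewayId=dxgw_id,
        proposalId=proposal_id,
        associatedGatewayOwnerAccount=vtgw_owner_account,
        overrideAllowedPrefixesToDirectConnectGateway=[{"cidr": p} for p in allowed_prefixes],
    )
```

Filtering invitations by sender account avoids accepting unrelated shares that may be pending in the same account.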

- Once attachments are shared and accepted, the routing must be configured. The SDDC Group automatically learns VPC CIDRs from a VPC attachment, and custom routes, configured on the SDDC Group's External VPC page, can be added to send additional destinations to the VPC. TGW routes are configured on the SDDC Group's External TGW page, and DXGW routes are configured on the DXGW itself in the AWS account.

- Routing from AWS to the SDDC is done by adding the SDDC Group's shared prefix-list to the appropriate route table(s). Individual SDDC subnets can also be added to the route table, but this is more complex operationally, as it requires manual updates whenever SDDC networks change. For a VPC attached directly to the SDDC Group, the target for these routes or prefix-lists is the shared vTGW that the VPC was attached to.

- Firewalls, security groups, and network ACLs must also permit the traffic for communication to work. All SDDC firewalls and AWS security groups are stateful, so a rule only needs to define the traffic in the direction in which it is initiated; AWS network ACLs are stateless and must permit traffic in both directions.
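The stateful versus stateless difference is easiest to see side by side. The sketch below allows HTTPS initiated from the SDDCs to an AWS workload, assuming a boto3 EC2 client. The helper names, the port, the rule number, and the 1024-65535 ephemeral return range are illustrative assumptions; size the ephemeral range for the client operating systems actually in use.

```python
def allow_https_from_sddcs(ec2_client, security_group_id: str, prefix_list_id: str) -> None:
    # Security groups are stateful: one inbound rule also permits the replies.
    ec2_client.authorize_security_group_ingress(
        GroupId=security_group_id,
        IpPermissions=[{
            "IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
            "PrefixListIds": [{"PrefixListId": prefix_list_id,
                               "Description": "SDDC Group networks"}],
        }],
    )


def allow_https_through_nacl(ec2_client, nacl_id: str, sddc_cidr: str, rule_number: int) -> None:
    # Network ACLs are stateless: requests and replies each need their own rule.
    # Inbound: HTTPS requests arriving from the SDDC network.
    ec2_client.create_network_acl_entry(
        NetworkAclId=nacl_id, RuleNumber=rule_number, Protocol="6",
        RuleAction="allow", Egress=False, CidrBlock=sddc_cidr,
        PortRange={"From": 443, "To": 443},
    )
    # Outbound: replies to the client's ephemeral ports (ASSUMED range).
    ec2_client.create_network_acl_entry(
        NetworkAclId=nacl_id, RuleNumber=rule_number, Protocol="6",
        RuleAction="allow", Egress=True, CidrBlock=sddc_cidr,
        PortRange={"From": 1024, "To": 65535},
    )
```

Inbound and outbound NACL rules are numbered independently, which is why the same rule number can be reused for both entries.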

Validation & Troubleshooting

The primary tools for troubleshooting the SDDC Group connectivity are:

The route tables for the SDDC Group, found on the Routing tab of the SDDC Group page in the VMC console. There are 2 route tables (per Region). This dual route table configuration ensures that SDDCs (Members) can connect to other SDDCs and external attachments, while external attachments are able to connect to SDDCs, but not other external attachments.

Members: This is the list of routes accessible to SDDC Group members (SDDCs). This will include all routes learned from SDDCs as well as external attachments.

External: This is the list of routes accessible to external attachments. This will include all routes learned from SDDCs, but not those from external attachments, as at least one side of all communications with an SDDC Group must be to or from an SDDC.

The Learned and Advertised routes between a single SDDC and Transit Connect, found on the Networking->Transit Connect page of the NSX Manager for the SDDC. Advertised Routes shows which routes come from route aggregations defined on the INTRANET Connectivity Endpoint.

Traceflow: Shows the path traffic follows through the SDDC Group (displayed as the TGW) to its destination (although it does not continue the path inside a remote SDDC).
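To reason about what the Members and External route tables should contain, here is a hypothetical Python model of how they are populated in one Region. It is not how Transit Connect builds its tables internally; it simply keeps the first route seen for each prefix, echoing the one-route-per-prefix behavior described earlier (which in the real service is non-deterministic).

```python
def build_route_tables(sddc_routes: dict[str, list[str]],
                       external_routes: dict[str, list[str]]) -> dict[str, dict[str, str]]:
    """Model the Members and External tables as {prefix: attachment} maps.

    Each input maps an attachment name to the prefixes learned from it.
    """
    members: dict[str, str] = {}
    external: dict[str, str] = {}
    for attachment, prefixes in sddc_routes.items():
        for prefix in prefixes:
            # SDDC routes go into both tables (first route per prefix is kept).
            members.setdefault(prefix, attachment)
            external.setdefault(prefix, attachment)
    for attachment, prefixes in external_routes.items():
        for prefix in prefixes:
            # External routes go only into Members: SDDCs can reach external
            # attachments, but external attachments cannot reach each other.
            members.setdefault(prefix, attachment)
    return {"Members": members, "External": external}


tables = build_route_tables(
    {"sddc-a": ["10.2.0.0/16"]},
    {"vpc-x": ["172.31.0.0/16"], "dxgw-1": ["192.168.0.0/16"]},
)
print(tables["External"])
```

Comparing a model like this against the Routing tab can quickly show whether an expected route is missing or was learned from an unexpected attachment.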

Authors and Contributors

- Michael Kolos, Technical Product Manager, Cloud Services, VMware

- Ron Fuller, Technical Product Manager, Networking & Security, VMware