Designlet: VMware Cloud on AWS HCX Cloud to Cloud (C2C) Migration Between SDDCs

Introduction

The HCX Cloud to Cloud (C2C) feature enables you to migrate workloads from one SDDC to another in VMware Cloud on AWS. C2C support requires an HCX Manager of “Type:Cloud” to be installed at both the source and destination cloud environments. The HCX plug-in and operation interfaces appear in HCX-enabled cloud sites for access to HCX services.

As indicated in the section below, an on-premises datacenter can connect to one or more VMware Cloud on AWS SDDCs. This provides highly available SDDCs to which workloads can be migrated, or which can provide disaster recovery if one of the SDDCs becomes unavailable.

NOTE: This resource is to be updated to reflect Transit Connect. Using Transit Connect would be the preferred method, since its higher bandwidth would allow migrations to complete much faster than over DX or the Internet.

Summary and Considerations

| Topic | Details |
|---|---|
| Use Case | |
| Pre-requisites | |
| General Considerations/Recommendations | |
| Cost implications | |
| Performance Considerations | Direct Connect typically performs better for migration than Internet connectivity because it involves fewer hops and lower latency, jitter, and packet loss. |
| Documentation reference | |
| Last Updated | May 2021 |

Interconnect Topology – C2C Interconnect

See the prerequisites section in the table for establishing C2C connectivity between the two VMware Cloud on AWS SDDCs:

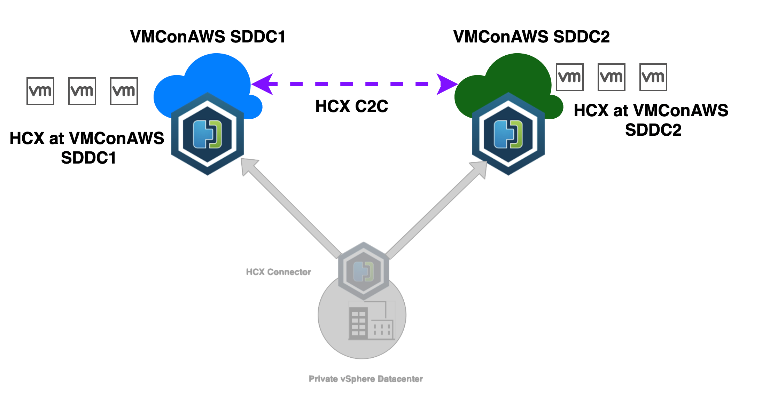

Figure 1 – Interconnecting two VMC on AWS SDDCs using HCX

As shown in Figure 1, there are two supported options for setting up C2C using HCX services between VMware Cloud on AWS SDDC1 and SDDC2. A third option, listed last, is unsupported:

- HCX Services using External IP – In this case, the VMware Cloud SDDCs are interconnected over External IP addresses. The compute profile for HCX should use externalNetwork as the Network Profile, as shown in Figure 5 below.

- HCX Services using Direct Connect – The main difference from the External IP option is that the SDDCs are connected over Direct Connect, which may transit through on-premises. The compute profile uses directConnectNetwork as the Network Profile.

- HCX Services over IPSec VPN and private IP addresses – This configuration is unsupported and not recommended due to the overhead of the IPSec tunnel. The site pairing may work, but the IX/NE tunnels may not come up because packets may not be forwarded to the HCX appliances.
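The choice among the connectivity options above can be sketched as a small lookup. This is only an illustrative helper, not part of any HCX API: the function name and the connectivity keys (`external_ip`, `direct_connect`, `ipsec_vpn`) are assumptions made for this example, while the network profile names come from the options listed above.

```python
# Hypothetical helper for illustration only; not an HCX API.
# Maps a connectivity option to the network profile used as the HCX uplink.
SUPPORTED_UPLINKS = {
    "external_ip": "externalNetwork",
    "direct_connect": "directConnectNetwork",
}


def select_uplink_profile(connectivity: str) -> str:
    """Return the network profile name for a supported connectivity option."""
    if connectivity == "ipsec_vpn":
        # IPSec VPN with private IP addresses is an unsupported configuration.
        raise ValueError("HCX over IPSec VPN with private IPs is unsupported")
    if connectivity not in SUPPORTED_UPLINKS:
        raise ValueError(f"unknown connectivity option: {connectivity!r}")
    return SUPPORTED_UPLINKS[connectivity]
```

Rejecting the IPSec VPN option explicitly, rather than treating it as just another unknown key, keeps the unsupported case visible to whoever reads the planning script.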

Background

Before the HCX C2C support became available, the following was a typical workflow that one had to follow for migration operations.

- To enable HCX, the service provider (VMware in the case of VMC) deploys an HCX-Cloud appliance on the cloud SDDC.

- The end customer deploys an HCX appliance (labeled HCX Connector in Figure 2 below) on-premises.

Note - HCX only supported hybridity operations from on-premises SDDCs to Cloud SDDC (HCX Connector → HCX appliances), both in VMware Cloud and outside.

Figure 2 - HCX without C2C support

With C2C

With the C2C feature, HCX now supports hybridity operations between HCX-enabled clouds (VMC SDDCs). This allows you to perform hybridity operations between the two clouds (Public Cloud A and Public Cloud B shown in the diagram below). Here "hybridity operations" refers to the following:

- Site pairing

- Deploying Interconnect Service mesh

- Network stretch

- Migrations

Figure 3 - HCX with C2C support

C2C has many advantages. A few are listed below:

- C2C enables direct workload migration using HCX from VMware Cloud on AWS SDDC1 to SDDC2, with no need to migrate the workloads to the on-premises datacenter first.

- C2C allows workload rebalancing, protection, and mobility across VMware Cloud on AWS SDDCs to meet cost management, availability, scale, or compliance requirements.

- The HCX Manager at the SDDC can now initiate or receive site pairing requests and act as the initiator or receiver during the HCX Interconnect (IX) tunnel creation.

Site Pairing After HCX Deployment

Figure 4 - Site Pairing after HCX deployment

A Site Pair establishes the connection needed for management, authentication, and orchestration of HCX services across a source and destination environment.

When working with Cloud to Cloud implementations, the following site pairing options are available:

A simplex site pair is like an HCX Connector to HCX Cloud relationship, where the connector is always the source. Similarly, in C2C, the cloud used to establish the site pairing acts as the source. Extensions and “forward migrations” are initiated at the source cloud.

A bi-directional site pair is the other option, where both clouds are site paired to each other. In this option, either cloud can initiate a network extension or a “forward migration”, and a single service mesh is used in either direction.

Note: Consider the cluster EVC baseline when planning a deployment that supports bi-directional live migrations. Without changing the EVC baseline, HCX can migrate between the same CPU family, or from an older to a newer CPU family.
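The pairing rules and the EVC note above can be expressed as a short planning sketch. Everything here is hypothetical and for illustration only: the `SitePair` class, the function names, and the use of a plain integer to rank CPU families (a higher number meaning a newer family) are assumptions of this example, not HCX or vSphere constructs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SitePair:
    """Hypothetical model of an HCX site pairing between two clouds."""
    initiator: str              # cloud used to establish the site pairing
    target: str
    bidirectional: bool = False

    def can_initiate(self, site: str) -> bool:
        """True if `site` may start an extension or forward migration."""
        if self.bidirectional:
            return site in (self.initiator, self.target)
        return site == self.initiator


def live_migration_ok(src_cpu_gen: int, dst_cpu_gen: int) -> bool:
    """Same, or older-to-newer, CPU family needs no EVC baseline change."""
    return src_cpu_gen <= dst_cpu_gen


def bidirectional_live_migration_ok(gen_a: int, gen_b: int) -> bool:
    """Both directions must satisfy the rule, so the families must match."""
    return live_migration_ok(gen_a, gen_b) and live_migration_ok(gen_b, gen_a)
```

Under this simplified model, a bi-directional pair between clusters of different CPU families fails the check in one direction, which is why the EVC baseline deserves attention during planning.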

Compute Profile

Figure 5 - Compute Profile with externalIPNetwork as uplink

The compute profile defines the structure and operational details for the virtual appliances used in a Multi-Site Service Mesh deployment architecture. The compute profile:

- Provisions the infrastructure at the source and destination site.

- Provides the placement details (Resource Pool, Datastore) where the system places the virtual appliances.

- Defines the networks to which the virtual appliances connect.

The integrated compute profile creation wizard can be used to create the compute and network profiles (or Network Profiles can be pre-created). HCX Interconnect service appliances are not deployed until a service mesh is created.
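As a planning aid, the information a compute profile carries can be written down as a simple record before opening the wizard. This is a minimal sketch, not the HCX data model: the class, its field names, and the sample values in the usage note are assumptions chosen to mirror the bullets above (placement details and networks).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ComputeProfile:
    """Hypothetical record of the inputs a compute profile needs."""
    name: str
    resource_pool: str       # placement: where the appliances run
    datastore: str           # placement: where the appliances are stored
    uplink_network: str      # for example externalNetwork
    management_network: str

    def missing_fields(self) -> List[str]:
        """Return the names of fields that are still empty."""
        return [key for key, value in vars(self).items() if not value]
```

For example, `ComputeProfile("sddc1-cp", "pool-a", "datastore-a", "externalNetwork", "").missing_fields()` reports that `management_network` has not been decided yet.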

Service Mesh Interconnect

Once the service mesh is set up as shown in Figure 6 below, ensure that the IX and NE tunnels are up.

Figure 6 – Service Mesh Interconnect

A Service Mesh specifies a local and remote Compute Profile pair. When a Service Mesh is created, the HCX Service appliances are deployed on both the source and destination sites and automatically configured by HCX to create the secure optimized transport fabric. More details on HCX Interconnect, Service Mesh, Compute and Network Profiles are available at this documentation page.

Management Gateway Firewall Rule

You must create a firewall rule in the Management Gateway that allows an HTTPS connection between the two HCX appliances. See Figure 7 below.

Figure 7 – Management Gateway Firewall Rule for HCX Appliances
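The intent of such a rule can be checked on paper with a small model. The sketch below does not call the Management Gateway or any NSX API. The `FirewallRule` class is hypothetical, the addresses in the usage note are placeholders from the reserved documentation ranges, and the only fixed fact is that HTTPS uses TCP port 443.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network

HTTPS_PORT = 443  # standard TCP port for HTTPS


@dataclass(frozen=True)
class FirewallRule:
    """Hypothetical model of a gateway firewall rule."""
    name: str
    source: str        # CIDR of the remote HCX appliance (placeholder)
    destination: str   # CIDR of the local HCX appliance (placeholder)
    port: int
    action: str = "ALLOW"

    def permits(self, src_ip: str, dst_ip: str, port: int) -> bool:
        """True if this rule allows the given connection."""
        return (
            self.action == "ALLOW"
            and port == self.port
            and ip_address(src_ip) in ip_network(self.source)
            and ip_address(dst_ip) in ip_network(self.destination)
        )
```

A rule such as `FirewallRule("hcx-c2c-https", "198.51.100.10/32", "192.0.2.20/32", HTTPS_PORT)` permits port 443 from the first address to the second and nothing else, which matches the narrow scope of the rule described above.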

C2C Workload Mobility

With the service mesh set up and the tunnels in the UP state, you can migrate workloads between the VMware Cloud on AWS SDDCs. You can use the supported migration options as documented on this page.

Figure 8 - Example of vMotion Migration Configuration Setup

Planning and Implementation

Planning and implementation consist of configuration, deployment, validation, and integration.

Configuration and Deployment

- With reference to Figure 1, deploy the HCX instances for the VMware Cloud on AWS SDDCs.

- Set up compute and network profiles with External IP addresses as the uplink (use the Direct Connect network in the case of DX circuits). Complete the service mesh configuration using these compute and network profiles.

- Ensure that the appliances are deployed successfully and that the IX and NE tunnels come up.
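The final check in the list above can be captured as a simple gate before any migration is attempted. This is a sketch under stated assumptions: the status dictionary is filled in by hand (for instance from what the service mesh view shows), and the function name is hypothetical rather than an HCX call.

```python
from typing import Dict, List


def tunnels_down(status: Dict[str, str]) -> List[str]:
    """Return the sorted names of tunnels whose state is not UP."""
    return sorted(
        name for name, state in status.items() if state.strip().upper() != "UP"
    )
```

If `tunnels_down({"IX": "UP", "NE": "DOWN"})` returns a non-empty list, the deployment is not yet ready for the validation steps that follow.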

Validation and Integration

- Workload migration from one SDDC to the other, in either direction, should succeed regardless of the migration type used, that is, vMotion, Bulk, or Replication Assisted vMotion (RAV). Note the limitation with respect to RAV mentioned in the General Considerations/Recommendations section above (this will be addressed in upcoming releases).

- Migration across the SDDCs should succeed over both DX circuit and Internet-based connectivity between the SDDCs.