Designlet: Accessing Amazon S3 by Private IP with the Connected VPC

Introduction

One of the features of VMware Cloud on AWS SDDCs is the ability to use the Connected VPC for low-latency, high-performance connectivity to native AWS VPC services with no additional data transfer fees. The Connected VPC is a network link from an SDDC to a subnet and VPC in a customer-owned AWS account, and it is typically configured at the time of SDDC deployment. When accessing Amazon S3, the default configuration routes traffic destined for same-region Amazon S3 public IPs over the Connected VPC path. The SDDC first performs SNAT (Source Network Address Translation) to an IP in the Connected VPC's subnet, and the traffic then uses an S3 gateway endpoint in that VPC to reach S3.

While this addresses most use cases, there may be times when we want to access Amazon S3 directly over private IPs, without this SNAT being performed.

Scope of the Document

This document describes the considerations and design choices for using an AWS PrivateLink interface endpoint for Amazon S3 with a private IP in the Connected VPC. This enables private communication with Amazon S3 without NAT.

Summary and Considerations

Use Case

Accessing Amazon S3 buckets over the Connected VPC without source NAT may be needed to enable use of a Multi-Edge SDDC (which limits NAT traffic to the default Edge pair), or where the source IP of the originating traffic needs to be visible to S3 for policy or logging purposes.

Pre-requisites

To take advantage of the direct data path to Amazon S3 over the Connected VPC without NAT, you will need to create specific configurations in the AWS account linked to your SDDC. You also need to ensure that the applications or services accessing S3 use the correct URLs.

General Considerations/Recommendations

An S3 bucket policy can be used to limit access to only the traffic coming through the VPC endpoint. It can also optionally restrict access further based on the source VM's private IP in the SDDC. Restricting access based only on a private IP, without also limiting traffic to the VPC endpoint, is equivalent to having a publicly accessible bucket. Because any customer can use the same private IP ranges, such a policy would allow access through any VPC endpoint in any AWS account. At a minimum, the policy should therefore restrict access to the VPC endpoint created in the Connected VPC.

Applications will need to access Amazon S3 using the custom URL for the interface endpoint, which follows a different format from typical public S3 URLs. S3 can be accessed simultaneously over the interface endpoint using private IPs and through a gateway endpoint over the Connected VPC from the SDDC, as long as the bucket policy allows both source VPC endpoints. The URL used determines which path is taken.
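The "private IP alone is not enough" rule is easy to check mechanically. The Python sketch below scans a bucket policy that has already been parsed into a dict. It flags Allow statements that condition on `aws:VpcSourceIp` without also conditioning on `aws:SourceVpce`. The helper names are hypothetical, and the check is only a heuristic. It does not evaluate Deny statements, `NotPrincipal`, or any other conditions.

```python
# Heuristic check (hypothetical helper, not an AWS tool): flag Allow statements
# that filter on aws:VpcSourceIp without also pinning aws:SourceVpce.

def _condition_keys(statement: dict) -> set:
    """Collect condition keys, lower-cased because IAM key names are case-insensitive."""
    keys = set()
    for operator_block in statement.get("Condition", {}).values():
        keys.update(key.lower() for key in operator_block)
    return keys


def ip_only_statements(policy: dict) -> list:
    """Return the Sid (or position) of each IP-only Allow statement in a parsed policy."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a policy may contain a single statement object
        statements = [statements]
    flagged = []
    for index, statement in enumerate(statements):
        if statement.get("Effect") != "Allow":
            continue
        keys = _condition_keys(statement)
        if "aws:vpcsourceip" in keys and "aws:sourcevpce" not in keys:
            flagged.append(statement.get("Sid", f"#{index}"))
    return flagged


policy = {
    "Version": "2012-10-17",
    "Statement": [
        {   # private IP only: a VPC endpoint in any account using this range matches
            "Sid": "IpOnly",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:Get*",
            "Resource": "arn:aws:s3:::mybucket/*",
            "Condition": {"IpAddress": {"aws:VpcSourceIp": "10.10.10.0/25"}},
        },
        {   # pinned to one VPC endpoint as well: not flagged
            "Sid": "VpceAndIp",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:Get*",
            "Resource": "arn:aws:s3:::mybucket/*",
            "Condition": {
                "StringEquals": {"aws:sourceVpce": "vpce-00xxxxxxxx"},
                "IpAddress": {"aws:VpcSourceIp": "10.10.10.0/25"},
            },
        },
    ],
}
print(ip_only_statements(policy))  # ['IpOnly']
```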

Performance Considerations

By default, each VPC endpoint supports up to 10 Gbps of bandwidth per Availability Zone and automatically scales up to 100 Gbps. When the load is distributed across all Availability Zones, the maximum bandwidth for a VPC endpoint is the number of Availability Zones multiplied by 100 Gbps. The underlying network path imposes further limits. If traffic is sent over a VPN, for example, it is limited to the performance of that VPN. For direct VPC networking, performance will be limited by the SDDC instance type(s) used for the management cluster and/or by the VM(s) connecting to S3 over this path.
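The bandwidth figures above combine with the path limit as a simple minimum. The sketch below assumes the per-AZ numbers quoted in this section (as of the June 2023 update). The 1.25 Gbps VPN in the example is a hypothetical value, not a measured one.

```python
# Per-AZ figures quoted in the paragraph above.
DEFAULT_GBPS_PER_AZ = 10   # default per-AZ bandwidth of a VPC endpoint
MAX_GBPS_PER_AZ = 100      # per-AZ ceiling after automatic scaling


def endpoint_ceiling_gbps(num_azs: int, scaled: bool = True) -> int:
    """Aggregate endpoint bandwidth when load is spread across num_azs AZs."""
    per_az = MAX_GBPS_PER_AZ if scaled else DEFAULT_GBPS_PER_AZ
    return num_azs * per_az


def effective_ceiling_gbps(num_azs: int, path_limit_gbps: float, scaled: bool = True) -> float:
    """Usable throughput is capped by the slower of the endpoint and the network path."""
    return min(endpoint_ceiling_gbps(num_azs, scaled), path_limit_gbps)


# Single-AZ endpoint (the SDDC's AZ), reached over a hypothetical 1.25 Gbps VPN:
print(endpoint_ceiling_gbps(1))          # 100
print(effective_ceiling_gbps(1, 1.25))   # 1.25
```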

Network Considerations/Recommendations

The SDDC sends private IP traffic over the Connected VPC only when it is destined for an address within that VPC's primary CIDR. Transit Connect can be used to reach an S3 interface endpoint in a different VPC or region. Attach the VPC that holds the interface endpoint to the SDDC group, or to a TGW that is attached to the SDDC group. This does not use the Connected VPC path and will be subject to additional data charges. The return path for traffic must match the outbound path.

When accessing an interface endpoint in the Connected VPC, the endpoint must be in a subnet associated with the VPC's main route table. If the SDDC is using managed prefix list mode for the Connected VPC, the custom route table associated with that subnet must instead have the managed prefix list added.

If the “S3” option is enabled on the Connected VPC page, traffic from the SDDC to Amazon S3 that uses public S3 URLs is SNATed to a Connected VPC IP and sent over the Connected VPC. If the option is toggled off, that traffic follows the SDDC's default route to the Internet. When a VPC endpoint URL is used, the destination IP is a private IP. Traffic is then sent without NAT and follows the SDDC's route table, using the Connected VPC if the VPC endpoint was created in the VPC's primary CIDR.
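The primary-CIDR rule above can be modelled as a simple membership test. The sketch below assumes a hypothetical Connected VPC primary CIDR of 172.31.0.0/16. It deliberately ignores everything else in the SDDC route table and only reports whether a private destination would use the Connected VPC.

```python
import ipaddress


def sddc_path_for(dest_ip: str, connected_vpc_primary_cidr: str) -> str:
    """Simplified model of the rule above: private traffic uses the Connected VPC
    only when the destination is inside that VPC's primary CIDR."""
    destination = ipaddress.ip_address(dest_ip)
    if destination in ipaddress.ip_network(connected_vpc_primary_cidr):
        return "connected-vpc"
    # Anything else (including a secondary CIDR of the same VPC) follows the SDDC
    # route table, e.g. Transit Connect via the SDDC group, or the default route.
    return "sddc-route-table"


# Hypothetical addressing: Connected VPC primary CIDR 172.31.0.0/16.
print(sddc_path_for("172.31.5.10", "172.31.0.0/16"))  # connected-vpc
print(sddc_path_for("10.50.1.20", "172.31.0.0/16"))   # sddc-route-table
```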

Cost Implications

Any traffic sent to the PrivateLink S3 interface endpoint is subject to AWS data processing charges (currently $0.01/GB). The endpoint itself also carries an hourly charge. Further charges may apply if the endpoint is in a different AZ or region from the source. By contrast, typical Connected VPC traffic to same-region S3 through a gateway endpoint incurs neither data nor hourly charges.
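The cost model above can be turned into a rough monthly estimate. In the sketch below, the $0.01/GB default comes from this section. The per-AZ hourly rate is deliberately a required parameter, because it is not stated here and should be taken from current AWS pricing. The 730-hour month is an assumed approximation, and cross-AZ or inter-region transfer charges are not modelled.

```python
def interface_endpoint_monthly_cost(gb_processed: float, num_azs: int,
                                    hourly_rate_per_az: float,
                                    per_gb_rate: float = 0.01,
                                    hours_per_month: float = 730) -> float:
    """Rough monthly estimate for an S3 interface endpoint:
    data processing plus the hourly charge for each AZ the endpoint is deployed in.
    Cross-AZ and inter-region transfer charges are NOT included."""
    data_charge = gb_processed * per_gb_rate
    hourly_charge = num_azs * hourly_rate_per_az * hours_per_month
    return round(data_charge + hourly_charge, 2)


# Data-processing component only (hourly rate deliberately set to 0 here):
print(interface_endpoint_monthly_cost(1000, num_azs=1, hourly_rate_per_az=0.0))  # 10.0
```

A gateway endpoint on the Connected VPC path would contribute 0 for both terms, which is the contrast this section draws.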

Document Reference

Accessing AWS Services from an SDDC

Last Updated

June 2023

Background

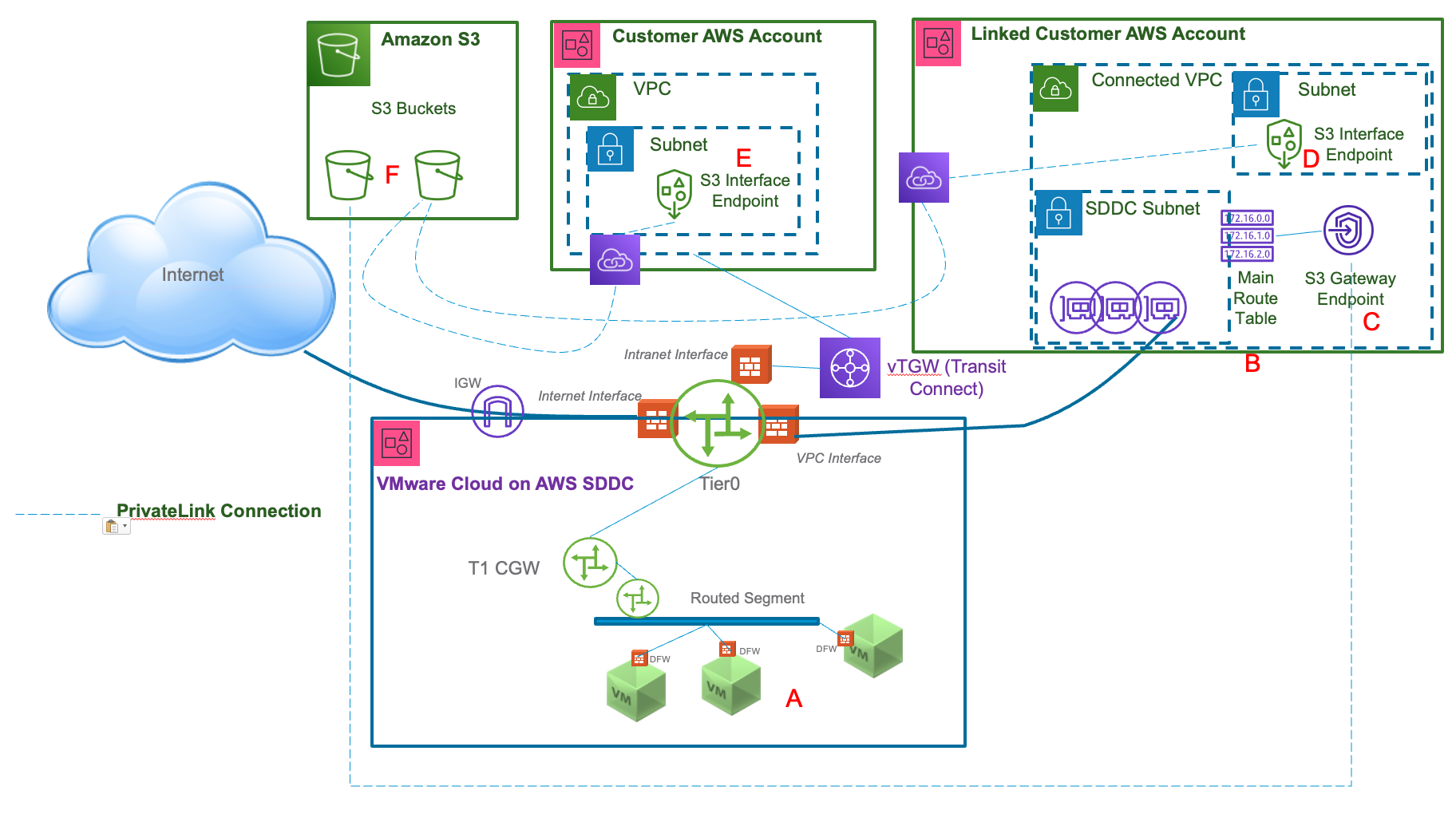

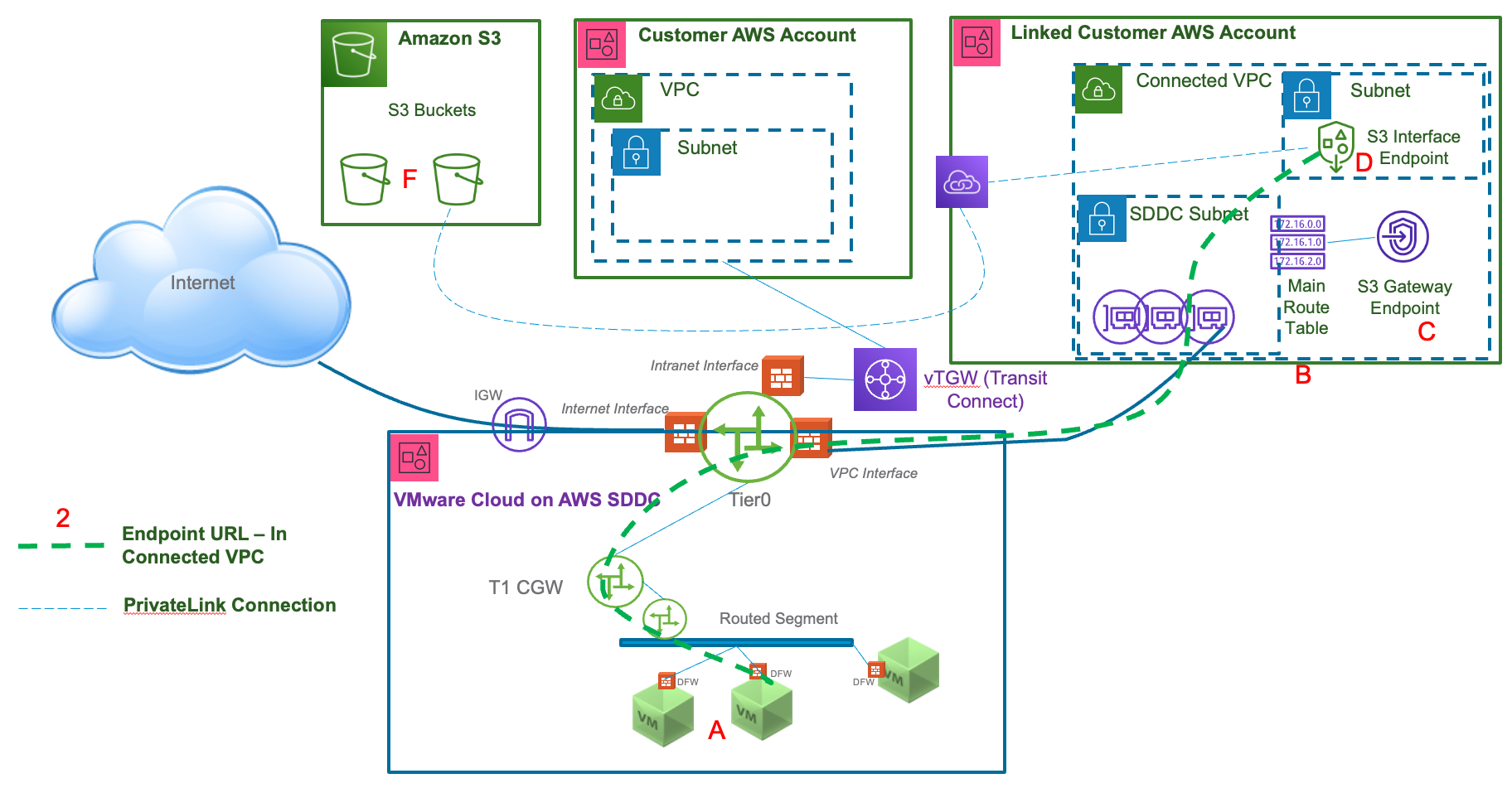

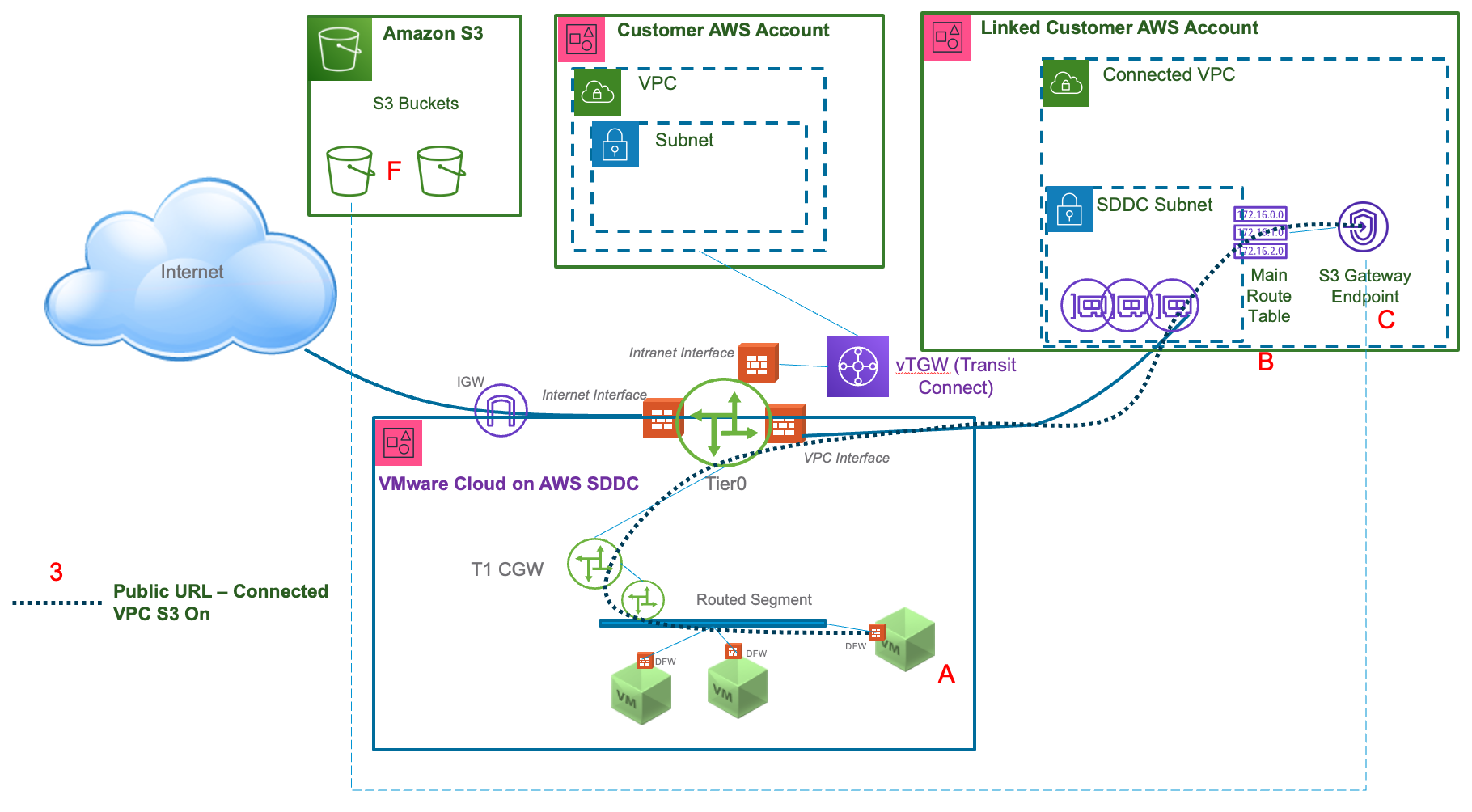

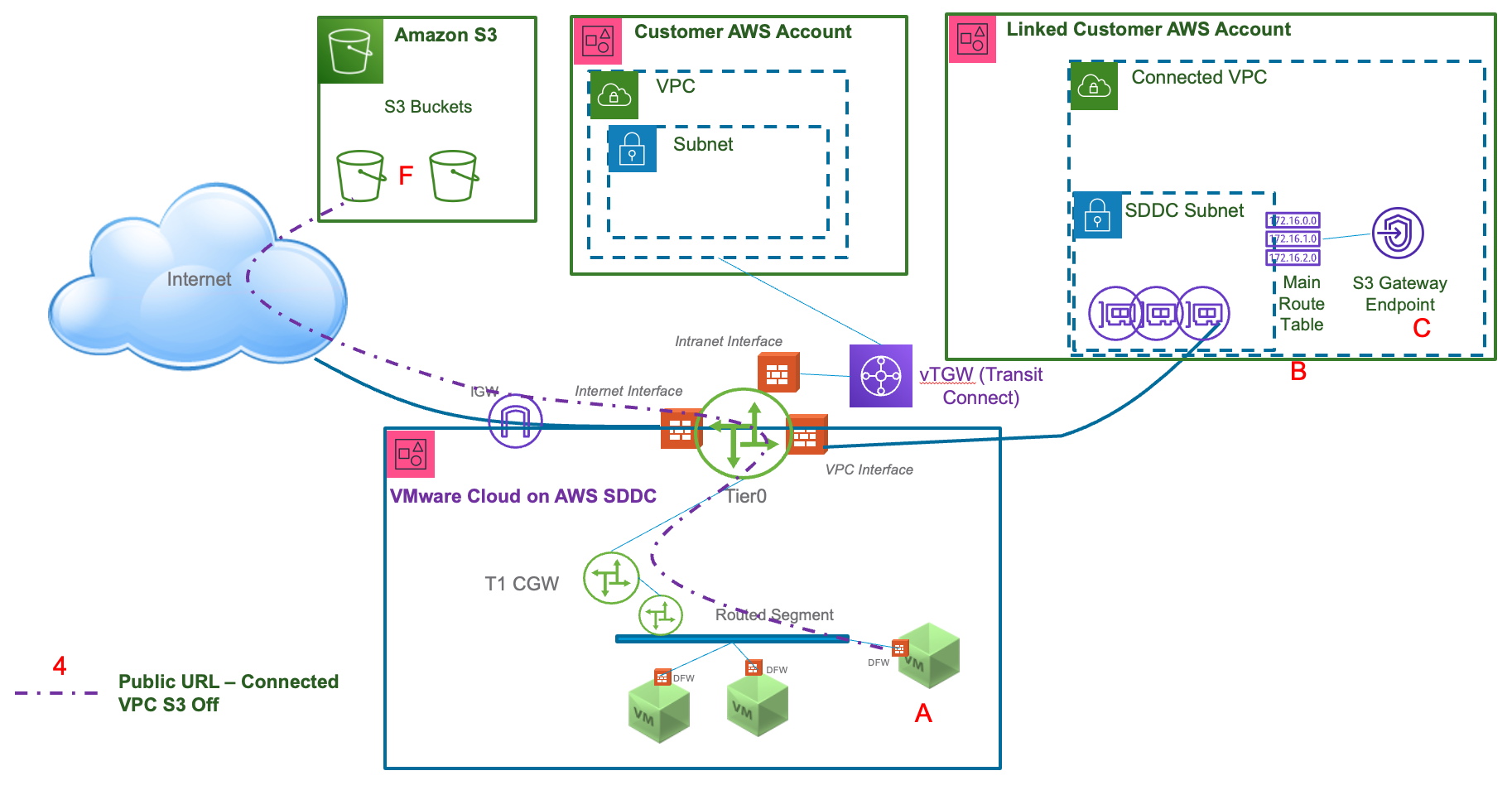

There are several different ways to access Amazon S3 buckets from a VMware Cloud on AWS SDDC. The most common scenario is to access S3 through a public URL with a public IP address. Multiple security controls can still be implemented with this public access. However, some scenarios require end-to-end private IP access, either for security reasons or simply to avoid the SNAT needed to reach a public IP. The accompanying diagram shows four possible network paths between a VM in a VMware Cloud on AWS SDDC and an S3 bucket in the same region, and compares some key aspects of each one. Option 2 is the solution described in this designlet.

Description of the components in the diagram:

- A: VMs in the SDDC (source of requests to S3)

- B: Main route table for the Connected VPC

- C: S3 gateway endpoint in the Connected VPC (most typical configuration)

- D: S3 interface endpoint (PrivateLink) in the Connected VPC

- E: S3 interface endpoint (PrivateLink) in another customer VPC

- F: S3 buckets in the same region as the SDDC

Comparison between the different paths shown in the diagram:

1. From a VM in the SDDC to a VPC endpoint URL for the S3 interface endpoint “E”:
   - The destination IP will be a private IP from the VPC's CIDR in the “Customer AWS Account”.
   - The URL used must be derived from either the Regional DNS name or the Zonal DNS name of the VPC endpoint.
   - The traffic is shown going through Transit Connect. The VPC where the S3 interface endpoint “E” is located must be attached to the SDDC Group.
   - Traffic is not subject to NAT: communication uses private IPs end-to-end.

2. From a VM in the SDDC to a VPC endpoint URL for the S3 interface endpoint “D”:
   - The destination IP will be a private IP from the Connected VPC's CIDR.
   - The URL used must be derived from either the Regional DNS name or the Zonal DNS name of the VPC endpoint. To minimize cross-AZ charges, use the Zonal DNS name for the AZ the SDDC is deployed to.
   - The traffic is shown going through the Connected VPC linked to the SDDC.
   - The subnet that the S3 interface endpoint “D” is attached to must be associated with the VPC's main route table (“B”). If the SDDC is using managed prefix list mode for the Connected VPC, the custom route table associated with that subnet must instead include the managed prefix list in its routes, so that return traffic can reach the SDDC.
   - Traffic is not subject to NAT: communication uses private IPs end-to-end.

3. From a VM in the SDDC to an S3 gateway endpoint in the Connected VPC (“C”), using the bucket's URL (“F”):
   - The destination IP will be a public IP.
   - The URL used must be the region-specific URL. By default, S3 URLs that are not region-specific resolve to us-east-1 IPs.
   - The traffic is shown going through the Connected VPC linked to the SDDC.
   - The S3 toggle on the Connected VPC page of NSX Manager must be “On” (this is the default).
   - Traffic is subject to SNAT: the source IP will be translated to an IP from the Linked Subnet in the Connected VPC.
   - The Linked Subnet must be associated with the VPC's main route table (“B”). If the SDDC is using managed prefix list mode for the Connected VPC, the custom route table associated with that subnet must instead include the managed prefix list in its routes, so that return traffic can reach the SDDC.

4. From a VM in the SDDC to an S3 bucket over the Internet, using the bucket's URL (“F”):
   - The destination IP will be a public IP.
   - The traffic is shown going through the SDDC's Internet Gateway (IGW). If the SDDC's default route points to another location, that path will be used instead.
   - The S3 toggle on the Connected VPC page of NSX Manager must be “Off” for in-region S3 URLs to take the Internet path.
   - Traffic is subject to SNAT: the source IP will be translated to the SDDC's default source NAT public IP, which is shown on the Overview page in NSX Manager. If the default route goes through another Internet connection, that connection is responsible for performing the SNAT to a public IP for connectivity over the Internet.
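The URL an application uses largely determines which of these paths applies. The sketch below is a simplification under stated assumptions. It recognizes only hostnames ending in `.vpce.amazonaws.com` and the regional `s3.<region>.amazonaws.com` forms, both virtual-hosted and path-style. Every other form is reported as not same-region, including dual-stack, legacy dash-style and region-less URLs. The function name and return labels are hypothetical.

```python
from urllib.parse import urlsplit


def classify_s3_url(url: str, sddc_region: str, s3_toggle_on: bool = True) -> str:
    """Rough mapping from the URL an application uses to a path in the comparison above."""
    host = (urlsplit(url).hostname or "").lower()
    if host.endswith(".vpce.amazonaws.com"):
        # Paths 1 and 2: private IP, no NAT (Transit Connect or Connected VPC)
        return "interface-endpoint"
    regional = f"s3.{sddc_region}.amazonaws.com"
    if host == regional or host.endswith("." + regional):
        # Paths 3 and 4: public IP with SNAT; the Connected VPC S3 toggle decides which
        return "connected-vpc-snat" if s3_toggle_on else "internet-snat"
    # Region-less or other-region URL: not treated as same-region S3 in this sketch
    return "not-same-region"


print(classify_s3_url("https://mybucket.s3.us-west-2.amazonaws.com/image.jpg", "us-west-2"))
# connected-vpc-snat
```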

Implementation

AWS Configuration

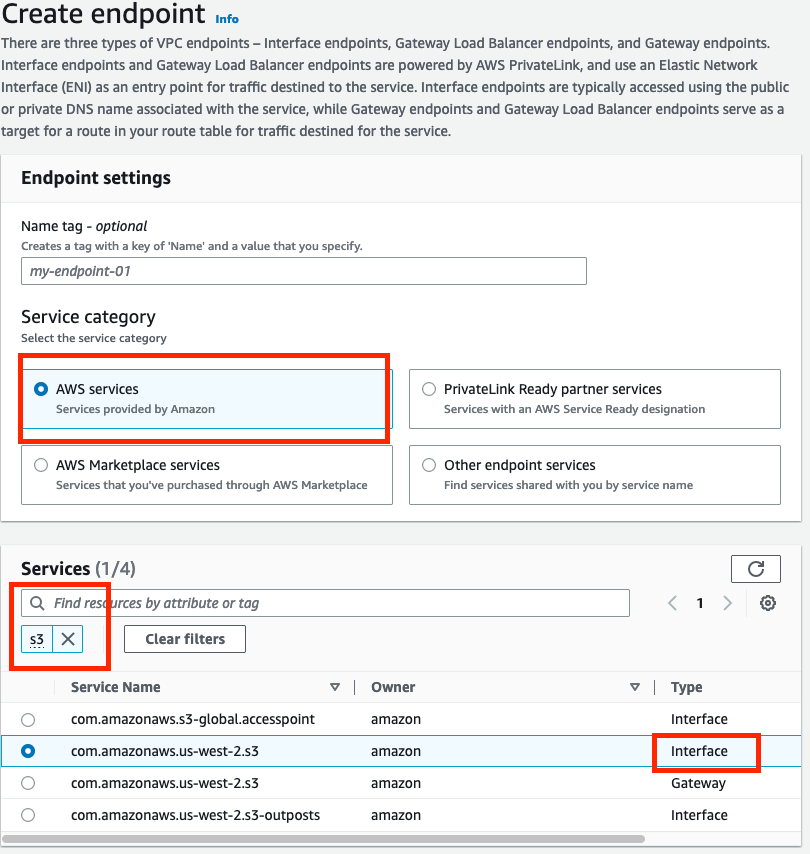

The first step is to create an S3 interface endpoint. This can be initiated in the AWS console under VPC->Endpoints->Create endpoint.

Select AWS services (the default option) and then search the services by entering S3 in the Services search box. Select the com.amazonaws.<region>.s3 service with type Interface.

Select the VPC. For this design, use the Connected VPC, which is identified on the Connected VPC page of the SDDC's NSX Manager console. Below the VPC selection, an Additional settings section allows configuring a private DNS name. That option is beyond the scope of this guide, and we will rely on public DNS.

Once you have selected the Connected VPC, select one or more subnets. Each AZ selected creates an Elastic Network Interface (ENI) for the endpoint in the selected subnet and incurs an associated hourly charge. To minimize cross-AZ charges, select the AZ where the SDDC is deployed (also shown on the Connected VPC page of the SDDC's NSX Manager console). You can use the same subnet the SDDC is connected to if it has sufficient IP addresses available (see this article for guidance on subnet sizing), or another subnet in the same AZ. Always use the AZ ID when comparing AZs across different AWS accounts, because the AZ name can differ between accounts. If in doubt, use the same AZ as the subnet linked to the SDDC. Select IPv4 for the IP address type.
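The "compare by AZ ID, not AZ name" advice can be applied when shortlisting subnets. The sketch below assumes subnet records shaped like the EC2 DescribeSubnets output, using the `SubnetId`, `VpcId`, `AvailabilityZoneId` and `AvailableIpAddressCount` fields. The IDs and the AZ name-to-ID mapping in the example are made up, and `candidate_subnets` is a hypothetical helper.

```python
def candidate_subnets(subnets: list, vpc_id: str, sddc_az_id: str,
                      min_free_ips: int = 1) -> list:
    """Return IDs of Connected VPC subnets in the SDDC's AZ, matched by AZ ID
    (not AZ name), that still have room for the endpoint's ENI."""
    return [
        subnet["SubnetId"]
        for subnet in subnets
        if subnet["VpcId"] == vpc_id
        and subnet["AvailabilityZoneId"] == sddc_az_id
        and subnet["AvailableIpAddressCount"] >= min_free_ips
    ]


# Hypothetical data shaped like DescribeSubnets output. In this made-up account,
# the AZ *name* us-west-2b corresponds to the AZ ID usw2-az1.
subnets = [
    {"SubnetId": "subnet-a", "VpcId": "vpc-1", "AvailabilityZone": "us-west-2a",
     "AvailabilityZoneId": "usw2-az2", "AvailableIpAddressCount": 200},
    {"SubnetId": "subnet-b", "VpcId": "vpc-1", "AvailabilityZone": "us-west-2b",
     "AvailabilityZoneId": "usw2-az1", "AvailableIpAddressCount": 50},
    {"SubnetId": "subnet-c", "VpcId": "vpc-1", "AvailabilityZone": "us-west-2b",
     "AvailabilityZoneId": "usw2-az1", "AvailableIpAddressCount": 0},
]
print(candidate_subnets(subnets, "vpc-1", "usw2-az1"))  # ['subnet-b']
```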

You can select a security group to customize the security rules on the endpoint. Alternatively, leave it blank to use the default security group, which is the same security group used by the SDDC's Connected VPC ENI. This security group must allow inbound traffic on TCP port 443 (HTTPS) from the VM(s) in the SDDC that need to connect to S3. You can also specify a custom VPC endpoint policy to further limit which principals, actions and resources can be accessed through this endpoint. For the purposes of this designlet, we will select “Full access” and rely on the security groups and S3 bucket policies to manage access.
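The console choices above map onto the EC2 CreateVpcEndpoint request. The sketch below only builds the keyword arguments, so it runs offline. You would then pass them to boto3's EC2 `create_vpc_endpoint` yourself. The helper name and the example IDs are hypothetical. `PrivateDnsEnabled` is set to False to match this designlet's reliance on public DNS.

```python
from typing import List, Optional


def s3_interface_endpoint_request(region: str, vpc_id: str, subnet_ids: List[str],
                                  security_group_ids: Optional[List[str]] = None) -> dict:
    """Keyword arguments for EC2 CreateVpcEndpoint mirroring the console choices above."""
    request = {
        "VpcEndpointType": "Interface",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "SubnetIds": list(subnet_ids),
        "IpAddressType": "ipv4",
        "PrivateDnsEnabled": False,   # rely on public DNS, as in this designlet
    }
    # No PolicyDocument: the endpoint keeps its default "Full access" policy.
    if security_group_ids:
        request["SecurityGroupIds"] = list(security_group_ids)
    # Without SecurityGroupIds, AWS attaches the VPC's default security group.
    return request


request = s3_interface_endpoint_request("us-west-2", "vpc-0123456789abcdef0", ["subnet-b"])
print(request["ServiceName"])  # com.amazonaws.us-west-2.s3
```

With credentials for the Connected VPC's account, the call would be `boto3.client("ec2", region_name="us-west-2").create_vpc_endpoint(**request)`.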

Click Create endpoint to start the creation process, which may take a few minutes to complete. When it is done, select the endpoint from the list of endpoints in the console and open its details page. Identify the DNS names and note down the AZ-specific name, to minimize the chance of cross-AZ traffic. This is the name with the AZ letter after the region ID, for example a name ending in us-west-2a.s3.us-west-2.vpce.amazonaws.com. You should also select the Subnets tab and note down the endpoint's IPv4 address(es).
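Picking the AZ-specific name out of the endpoint's DNS names is a simple suffix check on the first label. The sketch below assumes the names look like the examples in this designlet, with a leading `*.` and the AZ name appended to the `vpce-…` label. The endpoint ID shown is made up. In practice the names would come from the endpoint's `DnsEntries` (their `DnsName` values).

```python
from typing import List


def zonal_dns_name(dns_names: List[str], az_name: str) -> str:
    """Pick the AZ-specific name: its first label after '*.' ends in '-<az_name>'."""
    suffix = f"-{az_name}"
    for name in dns_names:
        labels = name.split(".")
        if len(labels) > 1 and labels[1].endswith(suffix):
            return name
    raise ValueError(f"no zonal DNS name found for {az_name}")


# Hypothetical endpoint ID.
names = [
    "*.vpce-0abc123-xyz789.s3.us-west-2.vpce.amazonaws.com",             # regional
    "*.vpce-0abc123-xyz789-us-west-2a.s3.us-west-2.vpce.amazonaws.com",  # zonal
]
print(zonal_dns_name(names, "us-west-2a"))
# *.vpce-0abc123-xyz789-us-west-2a.s3.us-west-2.vpce.amazonaws.com
```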

Then select the Security Groups tab and click the Group ID. On the Inbound rules tab, ensure that a rule allows HTTPS traffic from the IPs in the SDDC that need to access S3. If you have enabled managed prefix list mode for the Connected VPC, you can use the managed prefix list as the source here to allow access from all SDDC addresses.
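The inbound rule described above can also be expressed as an EC2 AuthorizeSecurityGroupIngress request. The sketch below builds the keyword arguments offline for IPv4 CIDRs and/or a managed prefix list. The group ID, CIDR and prefix list ID in the example are hypothetical.

```python
import ipaddress
from typing import Iterable, Optional


def https_ingress_request(group_id: str, sddc_cidrs: Iterable[str] = (),
                          prefix_list_id: Optional[str] = None) -> dict:
    """Keyword arguments for EC2 AuthorizeSecurityGroupIngress: allow TCP 443 from
    SDDC VM IPv4 ranges and/or the Connected VPC managed prefix list."""
    permission = {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443}
    cidrs = [str(ipaddress.ip_network(c)) for c in sddc_cidrs]  # validates each CIDR
    if cidrs:
        permission["IpRanges"] = [
            {"CidrIp": c, "Description": "SDDC VMs to S3 interface endpoint"} for c in cidrs
        ]
    if prefix_list_id:
        permission["PrefixListIds"] = [{"PrefixListId": prefix_list_id}]
    if "IpRanges" not in permission and "PrefixListIds" not in permission:
        raise ValueError("need at least one SDDC CIDR or a prefix list ID")
    return {"GroupId": group_id, "IpPermissions": [permission]}


print(https_ingress_request("sg-0123456789abcdef0", ["10.10.10.0/25"])["IpPermissions"][0]["IpRanges"])
```

The result would be passed to boto3's EC2 `authorize_security_group_ingress(**request)`.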

S3 configuration

Unless your S3 bucket is publicly accessible, you will need to modify its bucket policy to allow access. Bucket policies can be configured in many ways, and covering all the options is beyond the scope of this designlet. Here we use the example of allowing access from the VPC endpoint and, optionally, restricting it further based on the source IP.

If your bucket already has a policy in place, you can add a statement that allows access when the request comes from the VPC endpoint. Only sources that can reach the VPC's private IPs and are permitted by the security group can use the VPC endpoint. As noted during endpoint creation, you can also define a policy on the endpoint itself to further manage access through it.

Add a statement to the bucket policy that allows read access to the bucket's ARNs when the request comes through the VPC endpoint. It should look like the example below.

```json
{
  "Sid": "Access-to-specific-VPCE-only",
  "Effect": "Allow",
  "Principal": "*",
  "Action": "s3:Get*",
  "Resource": [
    "arn:aws:s3:::mybucket",
    "arn:aws:s3:::mybucket/*"
  ],
  "Condition": {
    "StringEquals": {
      "aws:sourceVpce": "vpce-00xxxxxxxx"
    },
    "IpAddress": {
      "aws:VpcSourceIp": "10.10.10.0/25"
    }
  }
}
```

Replace mybucket with the name of your S3 bucket, and vpce-00xxxxxxxx with the ID of your VPC endpoint (shown on the Endpoints page in the AWS console). Replace 10.10.10.0/25 with the subnet of the VMs in the SDDC. If desired, the IpAddress block can be omitted to allow access over the VPC endpoint from all VMs, or from any workloads that can reach the VPC directly. This statement goes inside the Statement array of the bucket policy.
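If you prefer to generate the statement rather than edit it by hand, the sketch below reproduces the statement shown above. It adds the optional `IpAddress` block only when a VM CIDR is given, and merges the statement into an existing policy document. Any existing statement with the same Sid is replaced, so rerunning the merge does not duplicate it. The helper names are hypothetical.

```python
import json
from typing import Optional


def vpce_read_statement(bucket: str, vpce_id: str, vm_cidr: Optional[str] = None) -> dict:
    """The statement shown above; the IpAddress block is added only if vm_cidr is given."""
    condition = {"StringEquals": {"aws:sourceVpce": vpce_id}}
    if vm_cidr:
        condition["IpAddress"] = {"aws:VpcSourceIp": vm_cidr}
    return {
        "Sid": "Access-to-specific-VPCE-only",
        "Effect": "Allow",
        "Principal": "*",
        "Action": "s3:Get*",
        "Resource": [f"arn:aws:s3:::{bucket}", f"arn:aws:s3:::{bucket}/*"],
        "Condition": condition,
    }


def merge_statement(existing_policy_json: Optional[str], statement: dict) -> str:
    """Add the statement to an existing policy (or a new one), replacing any
    statement that already uses the same Sid so reruns don't duplicate it."""
    if existing_policy_json:
        policy = json.loads(existing_policy_json)
    else:
        policy = {"Version": "2012-10-17", "Statement": []}
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    policy["Statement"] = [s for s in statements if s.get("Sid") != statement["Sid"]]
    policy["Statement"].append(statement)
    return json.dumps(policy, indent=2)


policy_json = merge_statement(None, vpce_read_statement("mybucket", "vpce-00xxxxxxxx", "10.10.10.0/25"))
print(policy_json)
```

The resulting JSON could be pasted into the console's policy editor, or applied with boto3's S3 `put_bucket_policy(Bucket="mybucket", Policy=policy_json)`.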

In the AWS console, make sure you're in the same region as the endpoint created earlier (and the SDDC). Go to S3->Buckets and select the bucket you are using. On the Permissions tab, select Edit on the Bucket policy. It's a good idea to keep a statement near the top that allows access from your own AWS IAM account. Otherwise, a mistake in the policy could lock you out of managing the bucket itself.

Once you've added the statement above to the policy, save the changes. Before saving, check that the brackets and commas form valid JSON, and address any errors or warnings the editor displays.
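Most hand-editing mistakes in a policy are JSON syntax errors, such as a missing or extra comma. The sketch below uses Python's standard `json` module to report where a pasted policy stops parsing. `check_policy_json` is a hypothetical helper. The exact error wording varies between Python versions, so only the line/column prefix is shown in the comment.

```python
import json


def check_policy_json(text: str) -> str:
    """Report where a hand-edited policy stops being valid JSON."""
    try:
        policy = json.loads(text)
    except json.JSONDecodeError as err:
        return f"invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"
    if not isinstance(policy, dict) or "Statement" not in policy:
        return "valid JSON, but not a policy object with a Statement element"
    return "ok"


# A trailing comma after the last statement is a common editing mistake:
print(check_policy_json('{"Version": "2012-10-17", "Statement": [{}],}'))
# prints a message starting with "invalid JSON at line 1,"
```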

SDDC configuration

The only configuration required on the SDDC is a Compute Gateway firewall rule that allows the traffic to S3. Configure the rule as follows:
- Source: the address(es) of the VMs that will connect to S3.
- Destination: the endpoint's IPv4 addresses noted earlier.
- Service: HTTPS.
- Applied To: the VPC Interface.
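To make the four rule fields concrete, the sketch below models the rule as a small Python class and checks whether a given flow would match it. It is a hypothetical, simplified illustration. It is not the NSX or VMware Cloud API schema, and it ignores rule ordering and groups. The addresses in the example are made up.

```python
import ipaddress
from dataclasses import dataclass
from typing import List


@dataclass
class CgwRule:
    """Hypothetical, simplified model of the Compute Gateway rule described above.
    This is NOT the NSX or VMware Cloud API schema; it only illustrates the fields."""
    sources: List[str]                 # SDDC VM addresses or CIDRs
    destinations: List[str]            # endpoint ENI IPv4 addresses
    port: int = 443                    # HTTPS service
    applied_to: str = "VPC Interface"
    action: str = "ALLOW"

    def permits(self, src: str, dst: str, port: int, interface: str) -> bool:
        """True if a TCP flow from src to dst:port leaving on interface matches and is allowed."""
        src_ip, dst_ip = ipaddress.ip_address(src), ipaddress.ip_address(dst)
        return (
            self.action == "ALLOW"
            and interface == self.applied_to
            and port == self.port
            and any(src_ip in ipaddress.ip_network(s, strict=False) for s in self.sources)
            and any(dst_ip in ipaddress.ip_network(d, strict=False) for d in self.destinations)
        )


# Hypothetical addresses: VMs in 10.10.10.0/25, endpoint ENI at 172.31.5.10.
rule = CgwRule(sources=["10.10.10.0/25"], destinations=["172.31.5.10"])
print(rule.permits("10.10.10.20", "172.31.5.10", 443, "VPC Interface"))   # True
print(rule.permits("10.10.10.200", "172.31.5.10", 443, "VPC Interface"))  # False (outside /25)
```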

Application/Workload configuration

To access S3 buckets from VMs in the SDDC, the full URL needs to be constructed based on the DNS name provided by the VPC Endpoint:

Take the AZ-specific DNS name and replace the leading “*” with “https://bucket”. Append the bucket name as a path, separated by a slash (“/”). Finally, append the name of the S3 object, also separated from the bucket name by a slash. This should result in a URL of the following format:

https://bucket.vpce-00f12fd7ebxxxxxxxxa-nwhnxxxxw-us-west-2a.s3.us-west-2.vpce.amazonaws.com/mybucket/image.jpg
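The URL construction steps above can be sketched as a small function. It assumes the DNS name is copied from the endpoint details with its leading `*.`. It also URL-encodes the object key with `urllib.parse.quote`, which keeps `/` separators intact. The function name is hypothetical.

```python
from urllib.parse import quote


def s3_vpce_object_url(zonal_dns_name: str, bucket: str, key: str) -> str:
    """Build an object URL from the endpoint DNS name, following the steps above."""
    if not zonal_dns_name.startswith("*."):
        raise ValueError("expected the endpoint DNS name including its leading '*.'")
    # Replace the leading "*" with "https://bucket"
    base = "https://bucket" + zonal_dns_name[1:]
    # Append /<bucket>/<object key>; quote() leaves "/" intact but escapes spaces etc.
    return f"{base}/{bucket}/{quote(key.lstrip('/'))}"


print(s3_vpce_object_url(
    "*.vpce-00f12fd7ebxxxxxxxxa-nwhnxxxxw-us-west-2a.s3.us-west-2.vpce.amazonaws.com",
    "mybucket", "image.jpg"))
# https://bucket.vpce-00f12fd7ebxxxxxxxxa-nwhnxxxxw-us-west-2a.s3.us-west-2.vpce.amazonaws.com/mybucket/image.jpg
```

AWS tooling can also be pointed at the endpoint instead of hand-building URLs. AWS documents passing the `https://bucket.vpce-…` base URL (without the bucket and key path) as the AWS CLI `--endpoint-url` or as the SDK's `endpoint_url`. Check the current AWS documentation for the exact form your SDK expects.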

If everything was correctly configured, you should be able to access the S3 bucket’s objects from VMs in the SDDC.
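A useful first check, run from a VM in the SDDC, is whether the URL's hostname resolves to the endpoint IPs noted on the Subnets tab. In the sketch below, `resolve_host` performs a live lookup with the standard `socket.getaddrinfo`. `check_resolution` is a pure comparison that can be tested offline. Both names, the result labels and the example IPs are hypothetical.

```python
import ipaddress
import socket
from typing import Iterable, Set
from urllib.parse import urlsplit


def resolve_host(url: str) -> Set[str]:
    """Resolve the URL's hostname (run this from a VM in the SDDC)."""
    host = urlsplit(url).hostname
    infos = socket.getaddrinfo(host, 443, proto=socket.IPPROTO_TCP)
    return {info[4][0] for info in infos}


def check_resolution(addresses: Iterable[str], endpoint_ips: Iterable[str]) -> str:
    """Compare resolved addresses with the endpoint IPs noted from the Subnets tab."""
    resolved = set(addresses)
    if not resolved:
        return "unresolved"
    if resolved <= set(endpoint_ips):
        return "ok"
    if all(ipaddress.ip_address(a).is_private for a in resolved):
        return "private-but-not-endpoint"   # e.g. a different endpoint's IP
    return "public"                          # likely a public S3 URL, not the vpce one


# Offline example with hypothetical addresses:
print(check_resolution(["172.31.5.10"], ["172.31.5.10"]))  # ok
```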

Validation & Troubleshooting

Start by confirming, for example with nslookup or dig, that the URL's hostname resolves to the private IP(s) of the VPC endpoint. Next, confirm that traffic leaves the SDDC on the correct path. The easiest way to do this is to run a traceroute or tracepath to the endpoint IP that the URL resolves to. Traffic leaving the SDDC over the Connected VPC goes through the VPC uplink, which gets an IP from the management CIDR. You can calculate this IP by adding an offset to the SDDC's management CIDR:

- For a /23 CIDR, add an offset of x.x.1.81. For example, with a management CIDR of 10.2.0.0/23, the Connected VPC uplink is 10.2.1.81.
- For a /20 CIDR, add an offset of x.x.10.129. For example, 10.2.0.0/20 has 10.2.10.129 as the uplink.
- For a /16 CIDR, add an offset of x.x.168.1. For example, 10.2.0.0/16 has 10.2.168.1 as the uplink.

If you see this IP in a traceroute from a VM in the SDDC, the traffic is taking the Connected VPC path. The VPC endpoint doesn't respond to traceroute, so the last hop you should expect to see is the uplink IP described above. All remaining hops will time out, and you can typically abort the trace with Control-C.
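The uplink offsets given above are plain address arithmetic. The sketch below encodes only the three management CIDR sizes listed in this section. It also checks whether a list of traceroute hop IPs contains the uplink. The function names are hypothetical, and the hop list would have to be extracted from your own traceroute output.

```python
import ipaddress
from typing import Iterable

# Offsets added to the start of the management CIDR, per the values above.
UPLINK_OFFSETS = {23: "0.0.1.81", 20: "0.0.10.129", 16: "0.0.168.1"}


def connected_vpc_uplink(mgmt_cidr: str) -> str:
    """Connected VPC uplink IP for a /23, /20 or /16 management CIDR."""
    network = ipaddress.ip_network(mgmt_cidr)
    offset = UPLINK_OFFSETS.get(network.prefixlen)
    if offset is None:
        raise ValueError(f"no documented offset for a /{network.prefixlen} management CIDR")
    return str(network.network_address + int(ipaddress.ip_address(offset)))


def takes_connected_vpc_path(traceroute_hops: Iterable[str], mgmt_cidr: str) -> bool:
    """True if the uplink IP appears among the traceroute hop addresses."""
    return connected_vpc_uplink(mgmt_cidr) in set(traceroute_hops)


for cidr in ("10.2.0.0/23", "10.2.0.0/20", "10.2.0.0/16"):
    print(cidr, "->", connected_vpc_uplink(cidr))
# 10.2.0.0/23 -> 10.2.1.81
# 10.2.0.0/20 -> 10.2.10.129
# 10.2.0.0/16 -> 10.2.168.1
```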

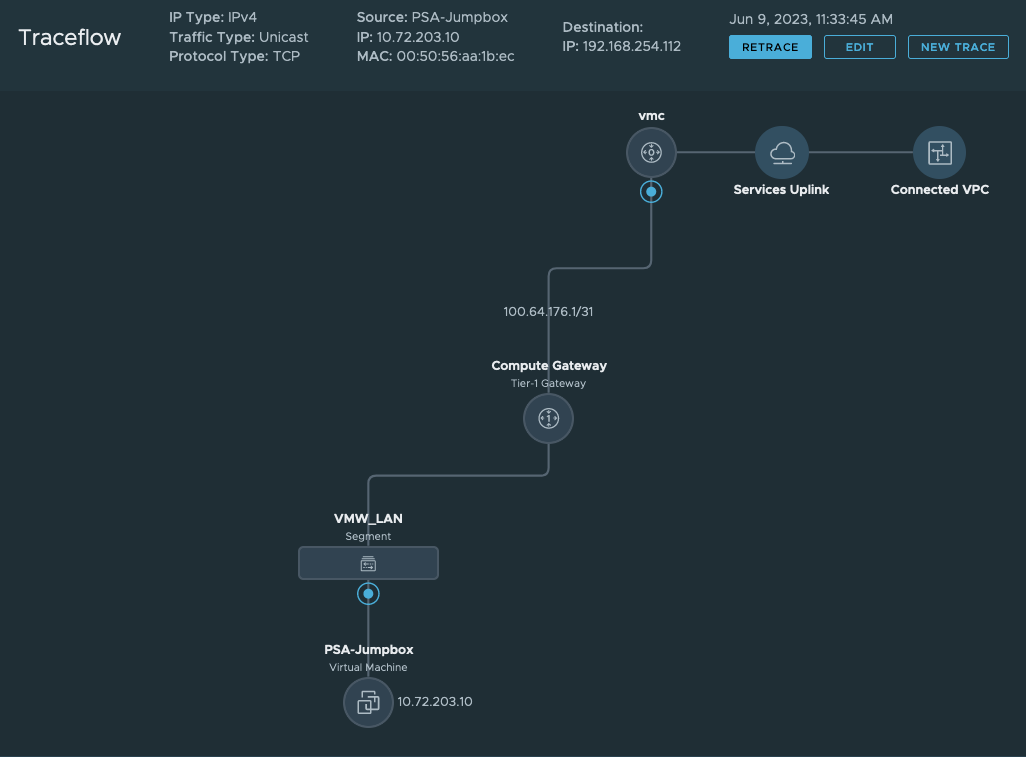

If you do not receive a response when trying to access the URL in a browser, you most likely have a connectivity issue (routing, firewall, or security group). Double-check the implementation steps above and ensure the correct traffic is being allowed. You can also use the NSX Traceflow tool in NSX Manager to confirm that the traffic is leaving the SDDC toward the Connected VPC.

Sample Traceflow path showing traffic going from a VM in the SDDC over the Connected VPC when accessing the S3 interface endpoint IP.

If you get a response that says “AccessDenied”, the S3 bucket policy is preventing access. Double-check the policy, the VPC endpoint ID, and the IP addresses of the VMs.
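The two failure modes above (no response vs. “AccessDenied”) can be told apart with a small probe. The sketch below uses only the standard library's `urllib.request`, and it sends an unauthenticated GET. That only succeeds if the bucket policy allows anonymous access through the endpoint, as the example policy does with `"Principal": "*"`. The function names and result labels are hypothetical. Only `diagnose` is pure and testable offline.

```python
import urllib.error
import urllib.request
from typing import Optional


def diagnose(status: Optional[int], body: str = "") -> str:
    """Map a probe result to the troubleshooting advice above."""
    if status is None:
        return "no-response"      # check routing, CGW firewall rule, security group
    if status == 403 and "AccessDenied" in body:
        return "access-denied"    # check bucket policy, VPC endpoint ID, VM source IPs
    if 200 <= status < 300:
        return "ok"
    return f"http-{status}"


def probe(url: str, timeout: float = 5.0) -> str:
    """Unauthenticated GET from a VM in the SDDC."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return diagnose(response.status)
    except urllib.error.HTTPError as err:   # S3 answered with an error status
        return diagnose(err.code, err.read().decode("utf-8", "replace"))
    except OSError:                         # URLError, timeouts and refused connections
        return diagnose(None)


print(diagnose(403, "<Error><Code>AccessDenied</Code></Error>"))  # access-denied
```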

Authors and Contributors

- Michael Kolos, Technical Product Manager, Cloud Services, VMware